Turning a sea of public and private data into organizational action and global change

The world is complex. Intergovernmental organizations have to work with diverse agencies in dozens of countries. Coordination on this level means pulling together disparate sources into clean, coherent data that supports better decisions at both the policy and the operational level.

Whether you’re organizing ground support in a natural disaster zone or compiling statistical reports in Excel, the quality and timeliness of your data are key. That’s why intergovernmental organizations around the world are using ScraperWiki to gather and present their data.

Intergovernmental organizations are using ScraperWiki to:

- Gather and clean statistical indicators from diverse agencies and countries

- Improve internal processes to source new data in innovative ways

- Visualize patterns and trends in data on a global scale

Case Study: UN-OCHA – Humanitarian Data Exchange

The UN Office for the Coordination of Humanitarian Affairs (UN-OCHA) is responsible for bringing together humanitarian actors to ensure a coherent response to emergencies, such as earthquakes and disease outbreaks.

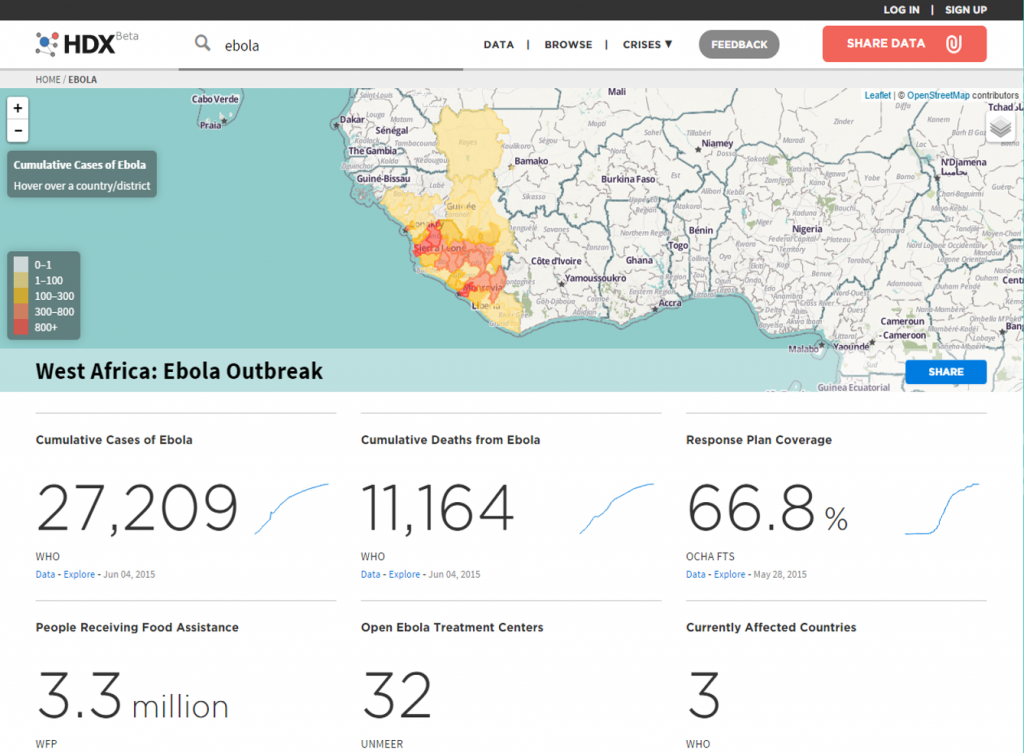

OCHA came to ScraperWiki while setting up the Humanitarian Data Exchange (HDX). The project is increasing data sharing across a wide range of governmental and non-governmental organisations, charities and commercial providers.

ScraperWiki initially collected the underlying baseline data. We automated the compilation of detailed country indicators, such as child mortality, infrastructure and healthcare, from 20 different websites, including those of the World Bank and UNICEF.
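As an illustration of what this kind of compilation involves, here is a minimal Python sketch that normalizes records from two differently shaped sources into one common schema and merges them. The two source formats, their field names and the `Observation` type are hypothetical, chosen for the example; they are not the actual formats published by the World Bank, UNICEF or the other sites.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """Hypothetical common schema: one value per country, indicator and year."""
    country_iso3: str
    indicator: str
    year: int
    value: float


def from_source_a(record: dict) -> Observation:
    """Hypothetical source A: nested JSON-style records with string years."""
    return Observation(
        country_iso3=record["country"]["iso3"].upper(),
        indicator=record["indicator"],
        year=int(record["date"]),
        value=float(record["value"]),
    )


def from_source_b(row: list) -> Observation:
    """Hypothetical source B: CSV-style rows of [iso3, year, indicator, value]."""
    iso3, year, indicator, value = row
    return Observation(iso3.strip().upper(), indicator.strip(), int(year), float(value))


def compile_indicators(source_a: list, source_b: list) -> list:
    """Normalize both sources, drop missing values and merge on (country, indicator, year)."""
    normalized = [from_source_a(r) for r in source_a if r.get("value") is not None]
    normalized += [from_source_b(r) for r in source_b if r[3].strip()]
    merged = {}
    for obs in normalized:
        # The first source to report a given country/indicator/year wins.
        merged.setdefault((obs.country_iso3, obs.indicator, obs.year), obs)
    return sorted(merged.values(), key=lambda o: (o.country_iso3, o.indicator, o.year))


# Made-up placeholder values, not real statistics.
source_a = [
    {"country": {"iso3": "aaa"}, "indicator": "child_mortality", "date": "2013", "value": 10.0},
    {"country": {"iso3": "bbb"}, "indicator": "child_mortality", "date": "2013", "value": None},
]
source_b = [
    ["AAA", "2013", "child_mortality", "99.0"],
    ["BBB", "2014", "child_mortality", "5.5"],
    ["CCC", "2014", "child_mortality", " "],
]
for obs in compile_indicators(source_a, source_b):
    print(obs)
```

Letting the first source win on conflicts is just one simple policy; a real pipeline might instead rank sources by reliability, or keep both values along with their provenance.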

ScraperWiki is now responsible for the worldwide technical management of the project. We ensure that the distributed development team works effectively and remains driven by user needs, and we promote the use of good metrics to measure project progress.

HDX is based on CKAN, a data management system that makes data accessible and provides tools to streamline publishing, sharing, finding and using data. ScraperWiki and its staff have been building and using such systems since the open data movement started in 2003.
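CKAN exposes its catalogue through an HTTP Action API. The minimal sketch below, using only Python's standard library, builds a `package_search` query and reads the dataset count and titles from the JSON reply. It assumes HDX serves the standard CKAN Action API under `https://data.humdata.org`; the helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: HDX serves the standard CKAN Action API at this base URL.
HDX_BASE = "https://data.humdata.org"


def build_search_url(base: str, query: str, rows: int = 10) -> str:
    """Build a CKAN Action API package_search URL for a free-text query."""
    params = urlencode({"q": query, "rows": rows})
    return f"{base.rstrip('/')}/api/3/action/package_search?{params}"


def parse_search_response(body: str) -> tuple:
    """Return (total hit count, list of dataset titles) from a package_search reply."""
    payload = json.loads(body)
    if not payload.get("success"):
        raise RuntimeError(f"CKAN error: {payload.get('error')}")
    result = payload["result"]
    return result["count"], [ds["title"] for ds in result["results"]]


def search(query: str, rows: int = 10) -> tuple:
    """Run a live search (needs network access; not exercised in the example below)."""
    with urlopen(build_search_url(HDX_BASE, query, rows), timeout=30) as resp:
        return parse_search_response(resp.read().decode("utf-8"))


print(build_search_url(HDX_BASE, "ebola", rows=5))
# → https://data.humdata.org/api/3/action/package_search?q=ebola&rows=5
```

Keeping URL building and response parsing separate from the network call makes both easy to test offline.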

HDX usage is growing, particularly during crises such as the Ebola epidemic and the Nepal earthquakes.

Case Study: OECD – Services Trade Restrictiveness Index (STRI) Discovery

The Organisation for Economic Co-operation and Development (OECD) aims to stimulate economic progress and world trade.

In the last few months ScraperWiki has begun providing services to the OECD, carrying out “Discovery” work with the OECD’s Services Trade Restrictiveness Index (STRI) team. In the Agile framework, Discovery is a short piece of work, involving the customer and appropriate specialists, that establishes a project’s scope. This Discovery aimed to examine the process used to construct the STRI and to identify how that process might be improved or automated.

The STRI for a country is constructed by assessing its legislation against a set of questions with either simple yes/no answers or, less frequently, numerical answers such as a period in years. The answers are stored in an MS Access database, each with a reference to its source: ideally a URL pointing to the specific piece of legislation.
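To make this data model concrete, here is a minimal Python sketch of one stored answer, with a helper that separates a URL from the rest of a free-text reference. The `Answer` type, its field names and the URL pattern are assumptions for illustration, not the OECD's actual MS Access schema.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple, Union

# Assumed pattern: an http(s) URL ends at whitespace, a quote, an angle bracket or ')'.
URL_RE = re.compile(r"https?://[^\s<>\"')]+")


@dataclass
class Answer:
    """One STRI answer (hypothetical field names)."""
    country: str
    question_id: str
    value: Union[bool, float]  # yes/no, or a number such as a period in years
    url: Optional[str]         # link to the specific legislation, if one was given
    commentary: str            # remaining human-readable reference text


def split_reference(reference: str) -> Tuple[Optional[str], str]:
    """Return (first URL or None, remaining text); any later URLs stay in the text."""
    match = URL_RE.search(reference)
    if match is None:
        return None, reference.strip()
    url = match.group(0).rstrip(".,;")  # drop sentence punctuation caught by the pattern
    rest = reference[: match.start()] + reference[match.end():]
    return url, " ".join(rest.split()).strip(",; ")


def make_answer(country: str, question_id: str, value: Union[bool, float], reference: str) -> Answer:
    """Build an Answer, storing the URL and the commentary in separate fields."""
    url, commentary = split_reference(reference)
    return Answer(country, question_id, value, url, commentary)


answer = make_answer("AAA", "Q1", True, "Companies Act, section 12; https://example.org/act/12.")
print(answer.url)         # → https://example.org/act/12
print(answer.commentary)  # → Companies Act, section 12
```

Only the first URL is extracted; a reference that cites several pieces of legislation would need a list-valued field instead.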

The Discovery identified the following potential improvements:

- Re-structuring the MS Access database to cleanly separate URLs to legislation from commentary and human-readable references;

- Automated checking of URLs and downloading of their content, with alerts when URLs become unavailable;

- A “uniform” search system giving access to the downloaded content and providing automated searching against lists of keywords or phrases.
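The URL-checking and search items can be sketched in a few lines of standard-library Python: download each URL, raise an alert for any that fail, then run a list of keywords or phrases against the stored text. The function names, the pluggable `fetch` and `alert` callables, and the plain substring matching are assumptions for illustration; a production system would more likely use a proper full-text index.

```python
import urllib.request
from typing import Callable, Dict, Iterable, List

Fetcher = Callable[[str], str]
Alert = Callable[[str, Exception], None]


def http_fetch(url: str) -> str:
    """Default fetcher (needs network access); URLError and HTTPError are OSErrors."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")


def print_alert(url: str, exc: Exception) -> None:
    """Placeholder alert; a real deployment might send an email instead."""
    print(f"ALERT: {url} unavailable ({exc})")


def check_and_download(urls: Iterable[str], fetch: Fetcher = http_fetch,
                       alert: Alert = print_alert) -> Dict[str, str]:
    """Fetch each URL, alert on any failure, and return {url: content} for the rest."""
    store: Dict[str, str] = {}
    for url in urls:
        try:
            store[url] = fetch(url)
        except (OSError, ValueError) as exc:  # ValueError covers malformed URLs
            alert(url, exc)
    return store


def search(store: Dict[str, str], phrases: Iterable[str]) -> Dict[str, List[str]]:
    """Map each keyword or phrase to the URLs whose text contains it (case-insensitive)."""
    lowered = {url: text.lower() for url, text in store.items()}
    return {p: sorted(u for u, t in lowered.items() if p.lower() in t) for p in phrases}


# Stand-in pages so the example runs offline; the texts are invented.
pages = {
    "https://example.org/a": "Foreign equity is limited to 49 per cent.",
    "https://example.org/b": "Licensing requirements apply to all providers.",
}


def fake_fetch(url: str) -> str:
    """Offline stand-in for http_fetch that fails for unknown URLs."""
    if url not in pages:
        raise OSError("HTTP 404")
    return pages[url]


store = check_and_download(list(pages) + ["https://example.org/gone"], fetch=fake_fetch)
print(search(store, ["foreign equity", "licensing", "nationality"]))
```

Injecting the fetcher keeps the checking logic testable without a network and makes it easy to swap in retries or rate limiting later.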

This project demonstrated our ability to work remotely with the OECD team to a defined timescale and to produce tangible artifacts beyond reports.

Following on from this Discovery phase, we have proposed a three-component project to automate the construction of the STRI.

Sarah Telford
