Learning to code bots at ONS

The Office for National Statistics releases over 600 national statistics every year. They asked ScraperWiki to help improve their backend processing, so that they could build a more usable web interface for people to download data.

We created an on-premises environment where their numerate staff learnt a minimal amount of coding. They now write short scripts to transform data that they previously didn't have the resources to process.

Matthew Jukes, Head of Product, Office for National Statistics said:

Who knew a little Python app spitting out CSVs could make people so happy but thank you team @ScraperWiki – great stuff 🙂

Spreadsheets

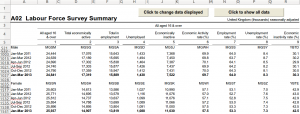

The data the team were processing was in spreadsheets which look like this:

They needed to turn them into a standard CSV format used internally at the ONS. Each spreadsheet could contain tens of thousands of observations, and each observation becomes one database row in the output file.
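As a minimal illustration of the reshaping involved, the sketch below "unpivots" a small cross-tabulated grid into one record per observation. The grid layout, the field names `period`, `region` and `value`, and the function name `unpivot` are hypothetical; the ONS's internal CSV format is not described in this post.

```python
def unpivot(grid):
    """Turn a cross-tab into one dict per non-empty observation cell.

    Assumes the first row holds column headings and the first column holds
    row headings (a hypothetical, idealised layout).
    """
    col_headings = grid[0][1:]
    rows = []
    for line in grid[1:]:
        row_heading, cells = line[0], line[1:]
        for col_heading, value in zip(col_headings, cells):
            if value == "":
                continue  # blank cells are not observations
            rows.append({"period": col_heading, "region": row_heading, "value": value})
    return rows


grid = [
    ["", "2013", "2014"],
    ["North", "12", "15"],
    ["South", "9", ""],
]

for row in unpivot(grid):
    print(row)
```

Here a 2×2 block of figures with one blank cell yields three output records; a sheet with tens of thousands of observations yields that many rows.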

We created an on-premises ScraperWiki environment for the ONS, using standard text editors and Python. Each type of spreadsheet needs one short recipe to be written: just a few lines of Python expressing the relative positions of headings, sub-headings and observations.
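ScraperWiki's actual recipe syntax isn't shown here, so the following is only a plain-Python sketch of the idea of describing each heading by its position relative to an observation. The grid and the names `closest_left` and `recipe` are hypothetical. A merged heading such as "Men", which spans two columns but is stored in only the first, is found by looking left along the heading row from each observation's column.

```python
def closest_left(grid, row, col):
    """Return the nearest non-empty cell at or to the left of (row, col)."""
    for c in range(col, -1, -1):
        if grid[row][c] != "":
            return grid[row][c]
    return None


def recipe(grid):
    """Hypothetical recipe for one spreadsheet layout.

    Row 0: merged headings (sex), row 1: sub-headings (status),
    column 0: region labels, everything else: observations.
    """
    out = []
    for r in range(2, len(grid)):
        for c in range(1, len(grid[r])):
            value = grid[r][c]
            if value == "":
                continue
            out.append({
                "region": grid[r][0],               # directly left, in the label column
                "status": grid[1][c],               # directly above, in the sub-heading row
                "sex": closest_left(grid, 0, c),    # merged heading: nearest to the left
                "value": value,
            })
    return out


grid = [
    ["", "Men", "", "Women", ""],
    ["", "Employed", "Unemployed", "Employed", "Unemployed"],
    ["North", "10", "2", "11", "1"],
    ["South", "8", "3", "9", "2"],
]

for row in recipe(grid):
    print(row)
```

Because every heading is described relative to the observation rather than by a fixed cell address, the same recipe keeps working when later releases add more regions or rows.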

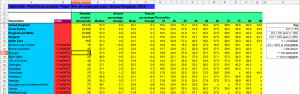

The environment included a coloured debugger for identifying that headings and cells were correctly matched:
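The debugger isn't described in detail here, so this is a hypothetical sketch of the underlying idea: colour each cell by the role a recipe assigned to it, so that a mismatched heading stands out when the grid is printed. It assumes a terminal that understands ANSI escape codes; the role names, colours and the `render` function are arbitrary choices for illustration.

```python
# ANSI escape codes for a few foreground colours (assumes an ANSI-capable terminal).
COLOURS = {"heading": "\033[34m", "subheading": "\033[35m", "observation": "\033[32m"}
RESET = "\033[0m"


def render(grid, roles, width=10):
    """Return the grid as text, colouring each cell by its role.

    `roles` maps (row, col) -> role name; cells without a role are left plain.
    """
    lines = []
    for r, row in enumerate(grid):
        cells = []
        for c, value in enumerate(row):
            text = value.ljust(width)
            colour = COLOURS.get(roles.get((r, c)))
            cells.append(f"{colour}{text}{RESET}" if colour else text)
        lines.append("".join(cells))
    return "\n".join(lines)


grid = [["", "2013"], ["North", "12"]]
roles = {(0, 1): "heading", (1, 0): "subheading", (1, 1): "observation"}
print(render(grid, roles))
```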

Most of the integration work involved making it easy to write scripts that could transform the data the ONS had: coping with the specific ways numbers are written, and outputting the correct CSV file format.
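The post doesn't list which number formats came up, so the conventions handled below (thousands separators, stray spaces, and placeholder markers such as `..` or `x` meaning "no figure") are assumptions for illustration, as are the column names and the functions `clean_number` and `to_csv`. Values are parsed with `Decimal` rather than `float` so that figures keep their written precision, and rows are written with Python's standard `csv` module.

```python
import csv
import io
from decimal import Decimal

# Hypothetical placeholder markers meaning "no figure available".
MISSING_MARKERS = {"", "..", "x", "-"}


def clean_number(text):
    """Convert a string such as ' 1,234 ' to a Decimal, or None for a placeholder."""
    text = text.strip()
    if text in MISSING_MARKERS:
        return None
    return Decimal(text.replace(",", ""))


def to_csv(rows, fieldnames=("region", "period", "value")):
    """Write rows (dicts) as CSV text, cleaning the value column first."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        # csv writes None as an empty field, so missing figures become blanks.
        writer.writerow({**row, "value": clean_number(row["value"])})
    return buffer.getvalue()


rows = [
    {"region": "North", "period": "2013", "value": " 1,234 "},
    {"region": "South", "period": "2013", "value": ".."},
]
print(to_csv(rows))
```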

Training

As part of the deployment, we gave a week of hands-on script development training to three members of staff. Numerate people learning some coding is, we think, vital to improving how organisations use data.

Before the training, Darren Barnes (Open Datasets Manager) said learning to code felt like crossing a “massive chasm”.

Within a couple of hours he was writing scripts that were then used operationally.

He said it was much easier to write code than to use the data applications with complex graphical interfaces that he often has to work with.

Conclusion

Using graphical ETL software, it took two weeks for an expert consultant to make the converter for one type of spreadsheet. With staff in the business coding Python in ScraperWiki’s easy environment themselves, it takes a couple of hours.

This has saved the ONS time on the initial conversion of each type of spreadsheet. When new statistics come out in later months, those spreadsheets can easily be converted again, with any problems fixed quickly and locally, saving even more.

The ONS have made over 40 converters so far. ScraperWiki has been transformational.
