Running a code club at DCLG

The Department for Communities and Local Government (DCLG) has to track activity across more than 500 local authorities and countless other agencies.

They needed a better way to handle this diversity and complexity of data, so they decided to use ScraperWiki to run a code club to train staff to code.

Martin Waudby, data specialist, said:

I didn’t want us to just do theory in the classroom. I came up with the idea of having teams of 4 or 5 participants, each tasked to solve a challenge based on a real business problem that we’re looking to solve.

The business problems being tackled were approved by Deputy Directors.

Phase one

The first club they ran had 3 teams, and lasted for two months so participants could continue to do their day jobs whilst finding the time to learn new skills. They were numerate people – statisticians and economists (just as in our similar project at the ONS). During that period, DCLG held support workshops, and “show and tell” sessions between teams to share how they solved problems.

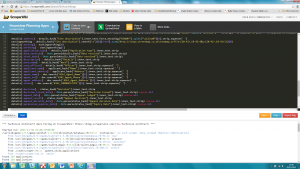

As ever with data projects, lots of the work involved researching sources of data and their quality. The teams made data gathering and cleaning bots in Python using ScraperWiki’s “Code in Browser” product – an easy way to get going, without anything to install and without worrying about where to store data, or how to download it in different formats.

Here’s what two of the teams got up to…

Team Anaconda

The goal of Team Anaconda (they were all named after snakes, to keep the Python theme!) was to gather data from Local Authority (and other) sites to determine intentions relating to Council Tax levels. The business aim is to spot trends and patterns, and to pick up early on rises which don’t comply with the law.

Local news stories often talk about proposed council tax changes.

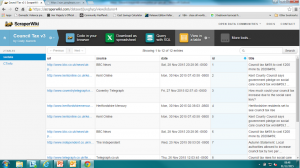

In the end, the team set up a Google alert for search terms around council tax changes, and imported the results into a spreadsheet. They then downloaded the content of those pages, creating an SQL table with a unique key for each article talking about changes to council tax.
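To illustrate the idea of a table with a unique key per article, here is a minimal sketch rather than the team's actual code. It assumes SQLite as the store and a hash of the article URL as the key; the table name `articles`, its columns, and the helper names are hypothetical.

```python
import hashlib
import sqlite3


def article_key(url):
    """Derive a stable key from the article URL (an assumed choice of key)."""
    return hashlib.sha1(url.encode("utf-8")).hexdigest()


def save_article(conn, url, content):
    """Store one article; saving the same URL again overwrites the old row."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles "
        "(id TEXT PRIMARY KEY, url TEXT, content TEXT)"
    )
    conn.execute(
        "INSERT OR REPLACE INTO articles (id, url, content) VALUES (?, ?, ?)",
        (article_key(url), url, content),
    )
    conn.commit()
```

Because the key is derived from the URL, an article that turns up in the alerts more than once is stored only once.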

They used regular expressions to find the phrases describing a percentage increase / decrease in Council Tax.
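As a rough sketch of this kind of matching (the team's own expressions are not shown here), the pattern below picks out phrases such as "increase of 1.99%" or "cut by 2 per cent". The list of trigger words and the function name `find_changes` are assumptions for illustration, and real news text would need a broader pattern.

```python
import re

# Hypothetical pattern: a direction word, then "of" or "by", then a percentage.
CHANGE_PATTERN = re.compile(
    r"\b(?P<direction>increase|rise|decrease|cut|reduction)\w*\s+(?:of|by)\s+"
    r"(?P<percent>\d+(?:\.\d+)?)\s*(?:%|per\s?cent)",
    re.IGNORECASE,
)


def find_changes(text):
    """Return signed percentage changes found in the text (rises positive)."""
    changes = []
    for match in CHANGE_PATTERN.finditer(text):
        word = match.group("direction").lower()
        sign = 1 if word in ("increase", "rise") else -1
        changes.append(sign * float(match.group("percent")))
    return changes
```

Returning signed numbers rather than the matched phrases makes it easier to compare proposed changes across articles later on.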

The team liked using ScraperWiki – it was easy to collaborate on scrapers there, and easier to get into SQL.

The next steps will be to restructure the data to be more useful to the end user, and improve content analysis, for example by extracting local authority names from articles.

Team Boa Constrictor

It’s Government policy to double the number of self-built homes by 2020, so this team was working on parsing sites to collect baseline evidence of the number being built.

They looked at various sources – quarterly VAT receipts, forums, architecture websites, sites which list plots of land for sale, planning application data, industry bodies…

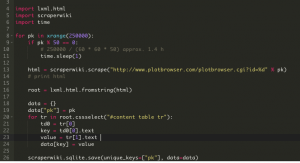

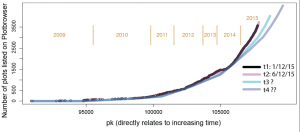

The team wrote code to get data from PlotBrowser, a site which lists self-build land for sale, and analysed that data using R.

They made scripts to get planning application data, for example in Hounslow, although they found that the data they could easily get from within the applications wasn’t enough for what they needed.

They liked ScraperWiki, especially once they understood the basics of Python.

The next step will be to automate regular data gathering from PlotBrowser, and count when plots are removed from sale.
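One way to count removals, sketched here under the assumption that each plot listing has a stable identifier and that the scraper keeps the set of identifiers seen on each run, is to compare consecutive snapshots. The function name and the example identifiers are hypothetical.

```python
def removed_plots(previous_ids, current_ids):
    """Return identifiers listed in the previous snapshot but not the current one."""
    return set(previous_ids) - set(current_ids)


# Example: two snapshots taken on consecutive runs (made-up identifiers).
last_week = {"plot-101", "plot-102", "plot-103"}
this_week = {"plot-102", "plot-103", "plot-104"}
print(len(removed_plots(last_week, this_week)))
```

A plot that disappears from the listings has not necessarily been sold, so a count like this would be a proxy rather than a direct measure.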

Phase two

At the end of the competition, teams presented what they’d learnt and done to Deputy Directors. Team Boa Constrictor won!

The teams developed a better understanding of the data available, and the level of effort needed to use it. There are clear next steps to take the projects onwards.

DCLG found the code club so useful that they are running another, more ambitious one. They’re going to have 7 teams, extending their ScraperWiki licence so everyone can use it. A key goal of this second phase is to really explore the data that has been gathered.

We’ve found at ScraperWiki that a small amount of coding skill, learnt by numerate staff, goes a long way.

As Stephen Aldridge, Director of the Analysis and Data Directorate, says:

ScraperWiki added immense value, and was a fantastic way for team members to learn. The code club built skills at automation and a deeper understanding of data quality and value. The projects all helped us make progress at real data challenges that are important to the department.