How to get along with an ASP webpage

Fingal County Council of Ireland recently published a number of sets of Open Data, in nice clean CSV, XML and KML formats.

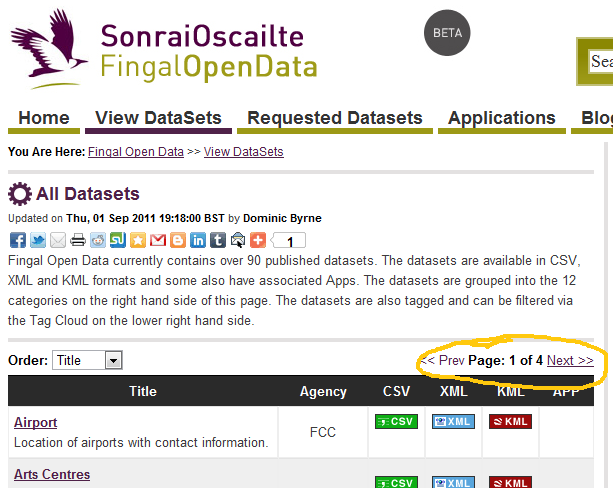

Unfortunately, the one set of Open Data that was difficult to obtain was the list of Open Data sets itself, because the list was split across four separate pages.

The important thing to observe is that the Next >> link is no ordinary link. You can see something is wrong when you hover your cursor over it. Here’s what it looks like in the HTML source code:

<a id="lnkNext" href="javascript:__doPostBack('lnkNext','')">Next >></a>

What it does (instead of taking the browser to the next page) is execute the javascript function __doPostBack().

Untangling this by stepping through the javascript code could take so long that it would be a hopeless waste of time, were it not for the fact that this code is generated by Microsoft and there are literally millions of webpages that work in exactly the same way.

The __doPostBack() javascript function is always on the page and it’s always the same, as you can see if you look at the HTML source:

    <script type="text/javascript">
    //<![CDATA[
    var theForm = document.forms['form1'];
    if (!theForm) {
        theForm = document.form1;
    }
    function __doPostBack(eventTarget, eventArgument) {
        if (!theForm.onsubmit || (theForm.onsubmit() != false)) {
            theForm.__EVENTTARGET.value = eventTarget;
            theForm.__EVENTARGUMENT.value = eventArgument;
            theForm.submit();
        }
    }
    //]]>
    </script>

So what it’s doing is putting the two arguments from the function call (in this example 'lnkNext' and '') into two hidden fields, __EVENTTARGET and __EVENTARGUMENT, of the form called “form1”, and then submitting the form back to the server as a POST request.
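To make that concrete, here is a minimal sketch (written for Python 3) of the request body such a postback sends. The helper name `build_postback_body` and the placeholder `__VIEWSTATE` value are made up for illustration. A real request has to echo back whatever hidden values the server put in the page.

```python
from urllib.parse import urlencode

def build_postback_body(hidden_fields, event_target, event_argument):
    """Return the form-encoded body that a __doPostBack() call would submit.

    hidden_fields is a dict of the page's other hidden inputs
    (for example __VIEWSTATE), copied unchanged from the HTML.
    """
    fields = dict(hidden_fields)
    fields["__EVENTTARGET"] = event_target
    fields["__EVENTARGUMENT"] = event_argument
    return urlencode(fields)

# Placeholder value: the real __VIEWSTATE is a very long opaque string.
body = build_postback_body({"__VIEWSTATE": "PLACEHOLDER"}, "lnkNext", "")
print(body)
```

The point of the sketch is only that "clicking" the link amounts to an ordinary POST with two extra fields filled in.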

Let’s try to look at the form. Here is some Python code which ought to do it.

    import mechanize

    br = mechanize.Browser()
    br.open("http://data.fingal.ie/ViewDataSets/")
    br.select_form("form1")
    print(br.form)

Unfortunately this doesn’t work, because the form has no name. Here is how it appears in the HTML:

    <form method="post" action="" id="form1">
    <div class="aspNetHidden">
    <input type="hidden" name="__EVENTTARGET" id="__EVENTTARGET" value="" />
    <input type="hidden" name="__EVENTARGUMENT" id="__EVENTARGUMENT" value="" />
    <input type="hidden" name="__LASTFOCUS" id="__LASTFOCUS" value="" />
    <input type="hidden" name="__VIEWSTATE" id="__VIEWSTATE" value="/wEPDwUKMjA4MT... insanely long ascii string />
    ...the entire rest of the webpage...
    </form>

The javascript selects the form by its id, which select_form("form1") unfortunately can’t do, because it looks the form up by name. Fortunately there is only one form in the whole page, so we can select it as the first form on the page:

    import mechanize

    br = mechanize.Browser()
    br.open("http://data.fingal.ie/ViewDataSets/")
    br.select_form(nr=0)
    print(br.form)
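Selecting by position is fragile if a page has more than one form. As a hedged alternative, mechanize's select_form() also accepts a `predicate` callable that can inspect the form's HTML attributes. The helper name `has_id` is made up for illustration, and the sketch assumes the form object exposes its attributes as a dict called `attrs`.

```python
def has_id(form_id):
    """Build a predicate that matches a form by its HTML id attribute."""
    def predicate(form):
        # Assumption: form.attrs is a dict of the <form> tag's attributes.
        return form.attrs.get("id") == form_id
    return predicate

# Intended use with the browser from the example above (not run here):
#   br.select_form(predicate=has_id("form1"))
```

The rest of this walkthrough sticks with nr=0, since this page has only one form.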

What do we get?

    <POST http://data.fingal.ie/ViewDataSets/ application/x-www-form-urlencoded
      <HiddenControl(__VIEWSTATE=/wEPDwUKMjA4... and so on ) (readonly)>
      <HiddenControl(__EVENTVALIDATION=/wEWVQK... and so on ) (readonly)>
      <TextControl(txtSearch=Search DataSets)>
      <TextControl(txtSearch=Search DataSets)>
      <SubmitControl(btnSearch=Search) (readonly)>
      <SelectControl(ddlOrder=[*Title, Agency, Rating])>>

Oh dear. What has happened to the __EVENTTARGET and __EVENTARGUMENT which I am going to have to put values in when I am simulating the __doPostBack() function?

I don’t really know.

What I do know is that if you insert the following line:

br.addheaders = [('User-agent', 'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.1) Gecko/2008071615 Fedora/3.0.1-1.fc9 Firefox/3.0.1')]

just before the line that says br.open() to include some headers that are recognized by the Microsoft server software, then you get them back:

    <POST http://data.fingal.ie/ViewDataSets/ application/x-www-form-urlencoded
      <HiddenControl(__EVENTTARGET=) (readonly)>
      <HiddenControl(__EVENTARGUMENT=) (readonly)>
      <HiddenControl(__LASTFOCUS=) (readonly)>
      ...

Right, so all we need to do to get to the next page is fill in their values and submit the form, like so:

    br["__EVENTTARGET"] = "lnkNext"
    br["__EVENTARGUMENT"] = ""
    response = br.submit()
    print(response.read())

Whoops, that doesn’t quite work, because those two controls are readonly. Luckily there is a function in mechanize to make this problem go away, which looks like:

br.set_all_readonly(False)

So let’s put this all together, including pulling out those special values from the __doPostBack() javascript to make it more general and putting it into a loop.

How about it?

    import mechanize
    import re

    br = mechanize.Browser()
    br.addheaders = [('User-agent', 'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.1) Gecko/2008071615 Fedora/3.0.1-1.fc9 Firefox/3.0.1')]
    response = br.open("http://data.fingal.ie/ViewDataSets/")

    for i in range(10):
        html = response.read()
        print("Page %d : %s" % (i, html))
        br.select_form(nr=0)
        print(br.form)
        br.set_all_readonly(False)
        # pull the two __doPostBack() arguments out of the Next >> link;
        # the brackets of the javascript call are escaped so they match literally
        mnext = re.search(r"""<a id="lnkNext" href="javascript:__doPostBack\('(.*?)','(.*?)'\)">Next >>""", html)
        if not mnext:
            break
        br["__EVENTTARGET"] = mnext.group(1)
        br["__EVENTARGUMENT"] = mnext.group(2)
        response = br.submit()

It still doesn’t quite work! This stops at two pages, but you know there are four.

What is the problem?

The problem is this SubmitControl in the list of controls in the form:

<SubmitControl(btnSearch=Search) (readonly)>

You think you are submitting the form, when in fact you are clicking on the Search button, which then takes you to a page you are not expecting that has no Next >> link on it.

If you disable that particular SubmitControl before submitting the form

br.find_control("btnSearch").disabled = True

then it works.

From here on it’s plain sailing. All you need to do is parse the html, follow the normal links, and away you go!
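As a rough sketch of that last step (written for Python 3), the standard library's HTMLParser can collect the ordinary links from each page while skipping the javascript: postback ones. The class name `LinkCollector`, the function `ordinary_links` and the sample HTML are all invented for illustration, and the real page's markup will differ.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collect href values of ordinary links, ignoring javascript: postbacks."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and not href.startswith("javascript:"):
            self.links.append(href)

def ordinary_links(html):
    """Return the hrefs of all normal links found in an HTML string."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links

# Hypothetical markup, standing in for one page of the dataset list.
sample = """<a href="/example/dataset-1">Dataset one</a>
<a id="lnkNext" href="javascript:__doPostBack('lnkNext','')">Next &gt;&gt;</a>"""
print(ordinary_links(sample))
```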

In summary

1. You need to use mechanize because these links are operated by javascript and a form.

2. Clicking the link involves copying the arguments from __doPostBack() into the __EVENTTARGET and __EVENTARGUMENT HiddenControls.

3. You must set readonly to False so you can even write to those values.

4. You must set the User-agent header or the server software doesn’t know what browser you are using and returns something that can’t possibly work.

5. You must disable all extraneous SubmitControls in the form before calling submit().
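The five points above can be pulled together into one sketch. It is an untested arrangement of the calls already shown in this post, not a verified scraper: the function names `next_postback_args` and `fetch_all_pages` are made up, the UTF-8 decoding is an assumption about the page, and mechanize is imported inside the function only so that the regex helper can be tried without it installed.

```python
import re

USER_AGENT = ("Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.1) "
              "Gecko/2008071615 Fedora/3.0.1-1.fc9 Firefox/3.0.1")

# The brackets of the javascript call are escaped so they match literally.
NEXT_LINK = re.compile(
    r"""<a id="lnkNext" href="javascript:__doPostBack\('(.*?)','(.*?)'\)">""")

def next_postback_args(html):
    """Return (target, argument) from the Next >> link, or None if absent."""
    match = NEXT_LINK.search(html)
    return match.groups() if match else None

def fetch_all_pages(url, max_pages=10):
    """Follow the Next >> postback link and return the HTML of each page."""
    import mechanize  # third-party package (point 1)

    br = mechanize.Browser()
    br.addheaders = [("User-agent", USER_AGENT)]           # point 4
    response = br.open(url)
    pages = []
    for _ in range(max_pages):
        html = response.read()
        if isinstance(html, bytes):                        # assumption: UTF-8
            html = html.decode("utf-8", "replace")
        pages.append(html)
        args = next_postback_args(html)
        if args is None:
            break
        br.select_form(nr=0)
        br.set_all_readonly(False)                         # point 3
        br["__EVENTTARGET"], br["__EVENTARGUMENT"] = args  # point 2
        br.find_control("btnSearch").disabled = True       # point 5
        response = br.submit()
    return pages
```

Calling fetch_all_pages("http://data.fingal.ie/ViewDataSets/") would then be expected to return one HTML string per page, though this has not been checked against the live site.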

Some of these tricks have taken me a day to learn and resulted in me almost giving up for good. So I am passing on this knowledge in the hope that it can be used. There are other tricks of the trade I have picked up regarding ASP pages that there is no time to pass on here because the examples are even more involved and harder to get across.

What we need is an ASP pages working group among the ScraperWiki diggers who take on this type of work. Anyone who is faced with one of these jobs should be able to bring it to the team and we’ll take a look at it as a group of experts with the knowledge. I expect problems to be disposed of within half an hour that would otherwise take someone who hasn’t done it before a week, or make them give up before they’ve even got started.

This is how we can produce results.

I’ve wasted many hours of my life struggling with scraping of ASP pages in the past. It probably would have helped if I’d blogged about it and explained some of my workarounds! A support group sounds great, but what would be even better would be if Microsoft didn’t use such an insane way of doing things!

Ruby mechanize is a little bit smarter than Python mechanize, as there is no need to disable that button.

What the scraper might look like in Ruby:

    require 'mechanize'

    agent = Mechanize.new
    doc = agent.get 'http://data.fingal.ie/ViewDataSets/'
    while next_link = doc.parser.at('form a#lnkNext')
      break unless next_link['href']
      form = doc.forms[0]
      # copy the two __doPostBack() arguments into the hidden fields
      form["__EVENTTARGET"], form["__EVENTARGUMENT"] = $1, $2 if next_link['href'] =~ /__doPostBack\('(.*)','(.*)'\)/
      doc = form.submit
    end

Epic article Julian! I’ve wanted to write up something similar in the past but by the time I’ve got the scraper worked out I’m so over dealing with it that I can’t bring myself to write about it :-/

We’ve got a bunch of scrapers that scrape the same planning system albeit with tiny differences (“customisations” that councils no doubt pay shit loads for). What can we do to help?

It wasn’t clear in my original comment but the bunch of scrapers are scraping an ASP.NET-based system.

I am trying this method but there doesn’t seem to be a submit control on my page. Any ideas?