Introducing status.scraperwiki.com

So you can find out if parts of ScraperWiki aren’t working, we’ve added a new status page.

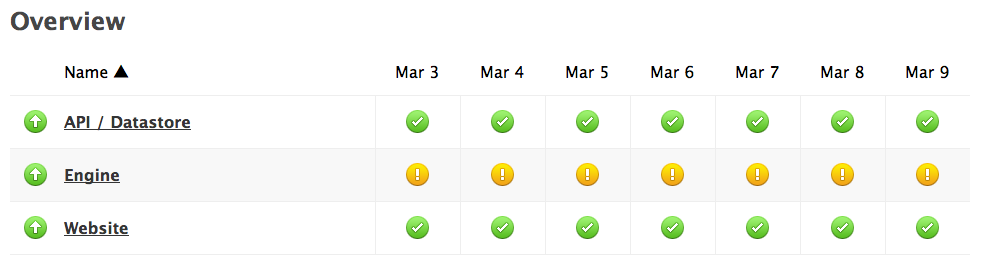

It’s called status.scraperwiki.com, and looks like this:

The page and the status monitoring are both done by the excellent Pingdom. We’ve been using it for a while to alert us to outages, so there’s quite a bit of history there for you to browse if you follow the links.

Some explanation of what the service names mean…

- API / Datastore. Once a minute, Pingdom calls an API that does a simple SQL query on a scraper datastore. This shows whether that worked or not.

- Engine. This tests the sandboxed (using LXC) code executor that is the core of ScraperWiki. It does it via a ScraperWiki View, so those are being tested too.

- Website. Just checks that the front page of scraperwiki.com loads. If it does, our basic infrastructure and the Django application are working.
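To make the API / Datastore check a bit more concrete, here is a minimal sketch in Python of that kind of probe: run a trivial SQL query over HTTP and report up or down. It is not Pingdom’s code or ours. The endpoint URL, the scraper name, the query parameters and the function names are all made up for illustration.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical endpoint and scraper name, for illustration only.
API_URL = "https://api.example.com/datastore/sqlite"
SCRAPER = "example_scraper"


def build_check_url(base=API_URL, scraper=SCRAPER, query="select 1 as ok"):
    """Build the URL for a trivial SQL query (parameter names are assumed)."""
    params = urlencode({"name": scraper, "query": query})
    return f"{base}?{params}"


def http_get(url, timeout=10):
    """Fetch a URL and return (status code, body text)."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8")


def datastore_is_up(fetcher=http_get):
    """Return True if the query endpoint answers 200 with a JSON list of rows."""
    try:
        status, body = fetcher(build_check_url())
        rows = json.loads(body)
    except (OSError, ValueError):
        # Network errors, timeouts and HTTP errors are OSError subclasses;
        # invalid JSON raises a ValueError subclass.
        return False
    return status == 200 and isinstance(rows, list)
```

Passing the fetcher in as an argument means the check can be tried without touching the network, for example `datastore_is_up(lambda url: (200, '[{"ok": 1}]'))`.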

As you can see we’re in the naughty corner: yellow exclamation marks all over the Engine. If you hover the mouse over them, you can see we’ve had a downtime of 5-17 minutes for each day in the last week.
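For a sense of scale, those downtime figures can be turned into a daily uptime percentage. This is just arithmetic on the 5 and 17 minute numbers above, assuming a 1,440-minute day; the function name is ours.

```python
MINUTES_PER_DAY = 24 * 60  # 1440


def uptime_percent(downtime_minutes):
    """Daily uptime as a percentage, given minutes of downtime that day."""
    return 100 * (1 - downtime_minutes / MINUTES_PER_DAY)


for minutes in (5, 17):
    print(f"{minutes} min down -> {uptime_percent(minutes):.2f}% uptime")
# 5 min down -> 99.65% uptime
# 17 min down -> 98.82% uptime
```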

We’ve diagnosed it, and it is not the whole engine, just the dynamic views frontend. Occasionally there is high traffic from our geocoding API, which works via this View. We’re doing some short-term optimisation to fix it, and in the medium term we will move geocoding to something else, like GeoNames.

Anyway, next time ScraperWiki isn’t working for you, go to status.scraperwiki.com to find out what is wrong!

I continually get “Sorry, we couldn’t connect to the datastore” messages when viewing my scrapers. I can edit them and run them, but can’t look at the data. status.scraperwiki.com shows the datastore to be up and available. Any ideas?

Thanks.

Hi Frank! That’s likely something specific to your scrapers… Could you give a link to them?

See also this FAQ about slow datastores: https://scraperwiki.com/docs/python/faq/#slow_datastore

Sorry for being slow replying – your comment got stuck in WordPress’s spam trap and I only just spotted it!