Software Archaeology and the ScraperWiki Data Challenge at #europython

There’s a term in technical circles called “software archaeology” – it’s when you spend time studying and reverse-engineering badly documented code in order to make it work, or to make it better. Scraper writing involves a lot of this stuff, and ScraperWiki’s data scientists are well accustomed to doing a bit of archaeology here and there.

But now, we want to do the same thing for the process of writing code-that-does-stuff-with-data. Data Science Archaeology, if you like. Most scrapers or visualisations are pretty self-explanatory (and an open platform like ScraperWiki makes interrogating and understanding other people’s code easier than ever). But working out why the code was written, why the visualisations were made, and who went to all that bother, is a little more difficult.

That’s why, this summer, ScraperWiki’s on a quest to meet and collaborate with data science communities around the world. We’ve held journalism hack days in the US, and interviewed R statisticians from all over the place. And now, next week, Julian and Francis are heading out to Florence to meet the European Python community.

We want to know how Python programmers deal with data. What software environments do they use? Which functions? Which libraries? How much code is written just to ‘get data’, and does that code run repeatedly? These people are geniuses, but for some reason nobody shouts about how they do what they do… Until now!

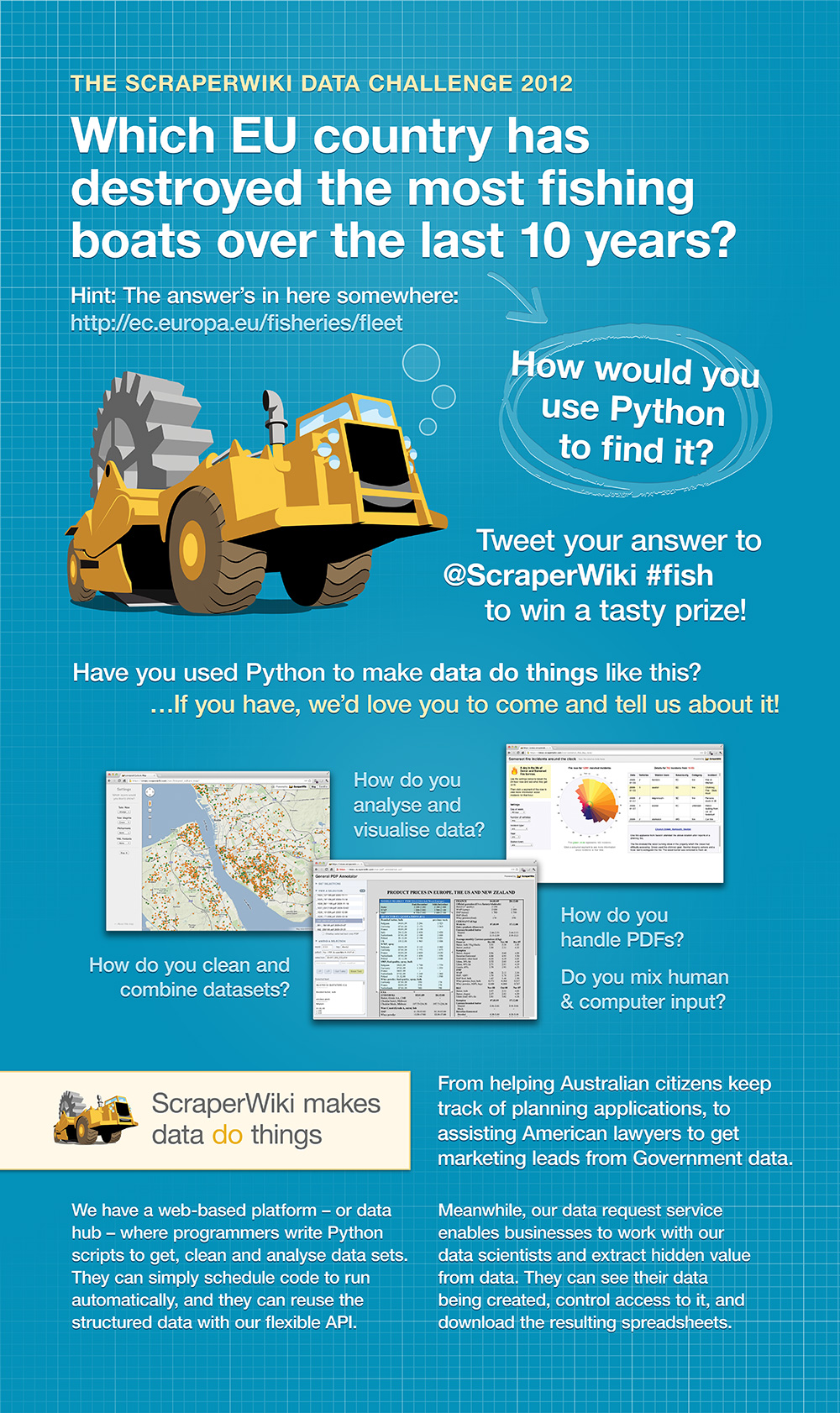

And, to coax the data science rock stars out of the woodwork, we’re setting a Data Challenge for you all…

In 2010 the BBC published an article about the ‘profound’ decline in fish stocks shown in UK records. “Over-fishing,” they argued, “means UK trawlers have to work 17 times as hard for the same fish catch as 120 years ago.” The same thing is happening all across Europe, and it got us ScraperWikians wondering: how do the combined forces of legislation and overfishing affect trawler fleet numbers?

We want you to trawl (ba-dum-tsch) through this EU data set and work out which EU country is losing the most boats as fishermen strive to meet EU policies and quotas. The data shows you stuff like each vessel’s license number, home port, maintenance history and transfer status, and a big “DES” if it’s been destroyed. We’ll be giving away a tasty prize to the most interesting exploration of the data – but most of all, we want to know how you found your answer, what tools you used, and what problems you overcame. So check it out!!
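At its core the challenge is a group-and-count. The layout of the EU data set isn’t described here, so what follows is only a minimal sketch, assuming the data has been exported to CSV with one row per vessel record and three hypothetical columns: `Country`, `License` and `Event`, where `Event` holds the “DES” code for destroyed vessels. Adjust the column names to whatever the real file uses.

```python
import csv
from collections import Counter
from typing import Iterable, Mapping


def load_rows(path: str) -> list[dict[str, str]]:
    """Read a CSV export into a list of dicts keyed by column header."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def destroyed_by_country(
    rows: Iterable[Mapping[str, str]],
    country_col: str = "Country",  # hypothetical column name
    license_col: str = "License",  # hypothetical column name
    event_col: str = "Event",      # hypothetical column holding the "DES" code
) -> Counter:
    """Count destroyed vessels per country, counting each license only once."""
    destroyed = set()
    for row in rows:
        # Tolerate missing cells, stray whitespace and lower-case codes.
        if (row.get(event_col) or "").strip().upper() == "DES":
            destroyed.add((row[country_col], row[license_col]))
    return Counter(country for country, _ in destroyed)
```

Usage would look something like `destroyed_by_country(load_rows("fleet_register.csv")).most_common(5)`, where the file name is made up. Collecting `(country, license)` pairs in a set first means a vessel that appears on several rows (say, once per maintenance entry) is only counted once. Note that raw counts naturally favour countries with big fleets; dividing by each country’s total number of licenses would give a loss rate instead, which may be the more interesting answer.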

---

PS: #Europython’s going to be awesome, and if you’re not already signed up, you’re missing out. ScraperWiki is a startup sponsor for the event and we would like to thank the Europython organisers and specifically Lorenzo Mancini for his help in printing out a giant version of the picture above, ready for display at the Poster Session.

Python, Python… I’d love to see this kind of ScraperWiki activity for PHP as well (I get the feeling that PHP is the last, merely tolerated “child” of SW).

Hi Michal! You’re right, things are a lot quieter on the PHP front here at ScraperWiki, although that’s certainly not intentional – Python is simply what most ScraperWiki coders use. 78% of ScraperWiki scrapers are written in Python, with PHP and Ruby accounting for another 11% each.

We’re working on some research into Twitter scrapers right now, and there the divide is even stronger. Of 57260 ScraperWiki scrapers which accessed twitter.com in the last month, only 99 were written in PHP. That’s less than 0.2%. By way of comparison, 722 (1.3%) were written in Ruby and 56439 (98.6%) were written in Python. There are a couple of reasons for this Python-centricity, which we’ll cover in the accompanying blog post.
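For anyone who wants to check these shares, here is a minimal Python sketch that recomputes them from the raw counts quoted above. The dictionary just restates those counts, and the function name `language_shares` is our own.

```python
def language_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each language's share of the total, as a percentage."""
    total = sum(counts.values())
    return {lang: 100 * n / total for lang, n in counts.items()}


# Scrapers that accessed twitter.com in the last month, by language.
twitter_scrapers = {"Python": 56439, "Ruby": 722, "PHP": 99}
shares = language_shares(twitter_scrapers)
for lang, pct in shares.items():
    print(f"{lang}: {twitter_scrapers[lang]} ({pct:.2f}%)")
# Python: 56439 (98.57%)
# Ruby: 722 (1.26%)
# PHP: 99 (0.17%)
```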

If you’ve got any ideas as to how we could make ScraperWiki more useful to PHP coders, drop me an email: zarino@scraperwiki.com. I’m a PHP boy at heart, so would love to see it get more use!! 🙂