Scraping the Royal Society membership list

To a data scientist any data is fair game, from my interest in the history of science I came across the membership records of the Royal Society from 1660 to 2007 which are available as a single PDF file. I’ve scraped the membership list before: the first time around I wrote a C# application which parsed a plain text file which I had made from the original PDF using an online converting service, looking back at the code it is fiendishly complicated and cluttered by boilerplate code required to build a GUI. ScraperWiki includes a pdftoxml function so I thought I’d see if this would make the process of parsing easier, and compare the ScraperWiki experience more widely with my earlier scraper.

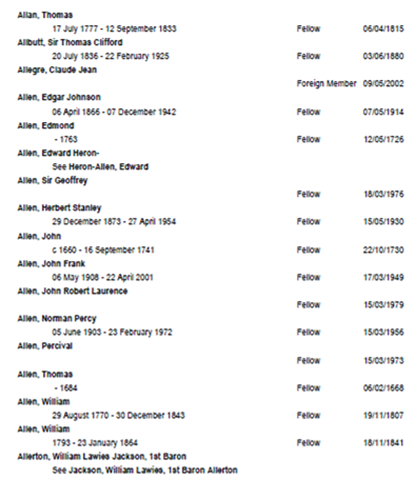

The membership list is laid out quite simply, as shown in the image below, each member (or Fellow) record spans two lines with the member name in the left most column on the first line and information on their birth date and the day they died, the class of their Fellowship and their election date on the second line.

Later in the document we find that information on the Presidents of the Royal Society is found on the same line as the Fellow name and that Royal Patrons are formatted a little differently. There are also alias records where the second line points to the primary record for the name on the first line.

pdftoxml converts a PDF into an xml file, wherein each piece of text is located on the page using spatial coordinates, an individual line looks like this:

<text top="243" left="135" width="221" height="14" font="2">Abbot, Charles, 1st Baron Colchester </text>

This makes parsing columnar data straightforward you simply need to select elements with particular values of the “left” attribute. It turns out that the columns are not in exactly the same positions throughout the whole document, which appears to have been constructed by tacking together the membership list A-J with that of K-Z, but this can easily be resolved by accepting a small range of positions for each column.

Attempting to automatically parse all 395 pages of the document reveals some transcription errors: one Fellow was apparently elected on 16th March 197 – a bit of Googling reveals that the real date is 16th March 1978. Another fellow is classed as a “Felllow”, and whilst most of the dates of birth and death are separated by a dash some are separated by an en dash which as far as the code is concerned is something completely different and so on. In my earlier iteration I missed some of these quirks or fixed them by editing the converted text file. These variations suggest that the source document was typed manually rather than being output from a pre-existing database. Since I couldn’t edit the source document I was obliged to code around these quirks.

ScraperWiki helpfully makes putting data into a SQLite database the simplest option for a scraper. My handling of dates in this version of the scraper is a little unsatisfactory: presidential terms are described in terms of a start and end year but are rendered 1st January of those years in the database. Furthermore, in historical documents dates may not be known accurately so someone may have a birth date described as “circa 1782” or “c 1782”, even more vaguely they may be described as having “flourished 1663-1778” or “fl. 1663-1778”. Python’s default datetime module does not capture this subtlety and if it did the database used to store dates would need to support it too to be useful – I’ve addressed this by storing the original life span data as text so that it can be analysed should the need arise. Storing dates as proper dates in the database, rather than text strings means we can query the database using date based queries.

ScraperWiki provides an API to my dataset so that I can query it using SQL, and since it is public anyone else can do this too. So, for example, it’s easy to write queries that tell you the the database contains 8019 Fellows, 56 Presidents, 387 born before 1700, 3657 with no birth date, 2360 with no death date, 204 “flourished”, 450 have birth dates “circa” some year.

I can count the number of classes of fellows:

select distinct class,count(*) from `RoyalSocietyFellows` group by class

Make a table of all of the Presidents of the Royal Society

select * from `RoyalSocietyFellows` where StartPresident not null order by StartPresident desc

…and so on. These illustrations just use the ScraperWiki htmltable export option to display the data as a table but equally I could use similar queries to pull data into a visualisation.

Comparing this to my earlier experience, the benefits of using ScraperWiki are:

- Nice traceable code to provide a provenance for the dataset;

- Access to the pdftoxml library;

- Strong encouragement to “do the right thing” and put the data into a database;

- Publication of the data;

- A simple API giving access to the data for reuse by all.

My next target for ScraperWiki may well be the membership lists for the French Academie des Sciences, a task which proved too complex for a simple plain text scraper…

Hi Ian,

We’ve now added the data to a Google Spreadsheet and aim to keep it up to date as new Fellows are elected and others pass away:

https://docs.google.com/spreadsheet/ccc?key=0AmIblj8F2r_GdG9aaFZRMjNrYUZXVHkzeXRzdmhFTmc&usp=sharing

I hope this is a bit easier for you to work from. If you’d like us to present any other data in more convenient formats then please do let us know at webmanager /at/ royalsociety dot org.

Francis Bacon

(Digital Communications Editor, The Royal Society)

Hi Francis

thanks – that’s rather nice – I shall communicate it to the history of science community! I’ll have to do more to provide added value 🙂

best regards

Ian