From future import x.scraperwiki.com

Time flies when you’re building a platform.

At the start of the year, we announced the beginnings of a new, more powerful, more flexible ScraperWiki. More powerful because it exposes industry standards like SQL, SSH, and a persistent filesystem to developers, so they can scrape and crunch and export data pretty much however they like. More flexible because, at its heart, the new ScraperWiki is an ecosystem of end-user tools, enabling domain experts, managers, journalists, kittens to work with data without writing a line of code.

At the time, we were happy to announce all of our corporate Data Services customers were happily using the new platform (admittedly, with a few rough edges!). Lots has changed since then (seriously – take a look at the code!) and we’ve learnt a lot about how users from all sorts of different backgrounds expect to see, collect and interact with their data. As a guy with UX roots, this stuff is fascinating – perhaps something for future blogs posts!

Anyway, back to the future…

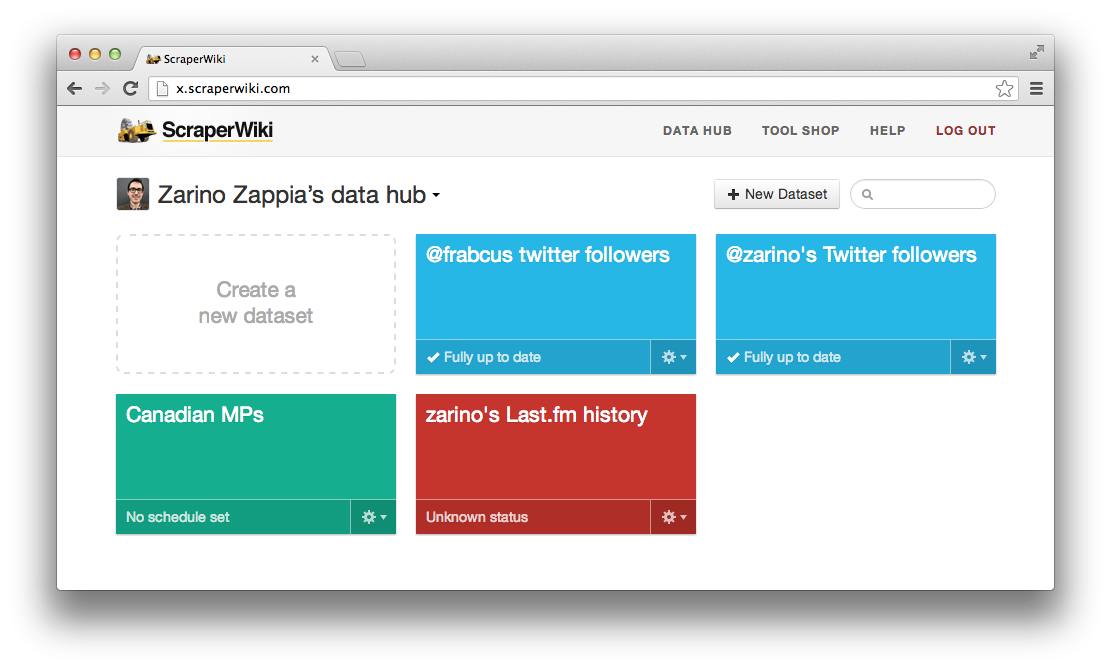

Last week, we invited our ‘Premium Account’ holders from ScraperWiki Classic, to come try the new ScraperWiki out. Each of them had their own private data hub, pre-installed with all of their Classic scrapers and views. And they all have access to a basic suite of tools for importing, visualising and exporting data (but there are far more to come).

The feedback we’ve had so far has been really positive, so I wanted to say a big public thank you to everyone in this first tranche of users – you awesome, data-wrangling trail-blazers, you.

But we’re not standing still. Since our December announcement, we’ve collated a shortlist of early adopters: people who are pushing the boundary of what Classic can offer, or who have expressed interest in the new platform on our blog, mailing list, or Twitter. And once we’ve made some improvements, and put the finishing touches on our first set of end-user tools, we’ll be inviting them to put new ScraperWiki to the test.

If you’d like to be part of that early adopter shortlist, leave a comment below, or email new@scraperwiki.com. We’d love to have you on board.

Hi, I tried sending a mail to new@, but it bounced back to me with a message from google groups.

I’m wondering what it takes to get an invite to new Scraperwiki?

Also, I’d like to know there’s any documentation for developers and

potential contributors, such as overview of architectural changes

since older Scraperwiki (they seem huge).

Thank you!

Hi,

My name is Juan Elosua and I’m working as a freelance in data analysis and data visualization.

In Spain, where I am based, we don’t yet have a FOIA law so scraping is one of our main issues when starting a data project….I am used to write ruby code with mechanize and nokogiri but I have also used python with beautiful soup to build my scrapers.

I have trained some newsrooms in order to be able to do some scraping but the coding barrier is quite high for them, I will love to be able to test the new scraperwiki to see if this will be an option for them in the near future.

Please accept me as one of your early adopters.

Cheers

Juan

PS: Just to let you know, I’ve tried to send an email to new@scraperwiki.com but It got bounced back as non-existing.

Hola Juan,

¡Muchas gracias por la nota!

We had a bug with the new@scraperwiki.com email address… which I’ve fixed now. Please drop us a line there, and we’ll know you’re interested!

Adios,

-Zach

I’d love to try out the new beta and be on the early adopter shortlist! My username is xclusion, is there any more info you’d need?

Hi, I’ll need your address, but I’ve put together a form to capture that, so if you visit: https://docs.google.com/forms/d/13Yk-Y1yliEmgxewSLvUFjpiFVl8Xb6pJNfnTxZc_gMc/viewform and fill that in, I’ll add you to the list! 🙂