It’s SQL. In a URL.

Squirrelled away amongst the other changes in ScraperWiki’s site redesign are some substantial improvements to the external API explorer.

We’re going to concentrate on the SQLite function here as it is the most important, but as you can see on the right there are other functions for getting out scraper metadata.

Zarino and Julian have made it a little bit slicker to find out the URLs you can use to get your data out of ScraperWiki.

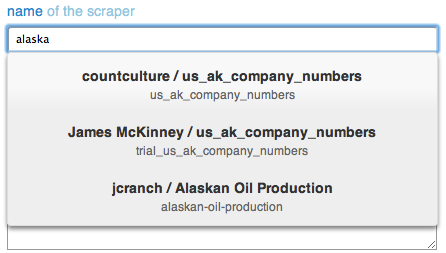

1. As you type into the name field, ScraperWiki now does an incremental search to help you find your scraper, like this.

2. After you select a scraper, it shows you its schema. This makes it much easier to know the names of the tables and columns while writing your query.

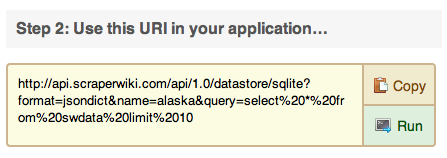

3. When you’ve edited your SQL query, you can run it as before. There’s also now a button to quickly and easily copy the URL that you’ve made for use in your application.

You can get to the explorer with the “Explore with ScraperWiki API” button at the top of every scraper’s page. This makes it quite useful for quick and dirty queries on your data, as well as for finding the URLs for getting data into your own applications.
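If you'd rather build the URL in code than copy it from the explorer, here is a rough sketch of the idea in Python. The endpoint and the parameter names (`format`, `name`, `query`) are assumptions for illustration, as are the scraper name `my_scraper` and the table name `swdata`; check them against the URL the explorer actually gives you.

```python
from urllib.parse import urlencode

# Assumed endpoint: replace it with the base of the URL the explorer shows you.
API_BASE = "https://api.scraperwiki.com/api/1.0/datastore/sqlite"


def build_query_url(scraper_name, sql, fmt="jsondict", base=API_BASE):
    """Return a URL that carries a SQL query as a query-string parameter.

    The parameter names used here are assumptions, not a documented contract.
    """
    params = {"format": fmt, "name": scraper_name, "query": sql}
    # urlencode percent-encodes the SQL so spaces and symbols survive in a URL.
    return base + "?" + urlencode(params)


# Hypothetical scraper and table names, purely for illustration.
url = build_query_url("my_scraper", "select * from swdata limit 10")
print(url)
```

The only real work is the encoding: the SQL goes in as an ordinary query-string value, so anything that can fetch a URL can run the query.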

Let us know when you do something interesting with data you’ve sucked out of ScraperWiki!