For a few years now, people have said “but you don’t just do scraping, and you’re not a wiki, why are you called that?” We’re pleased to announce that we have finally renamed our company. We’re now called The Sensible Code Company. Or just Sensible Code, if you’re friends! We design and sell products that […]

Remote working at ScraperWiki

We’ve just posted our first job advert for a remote worker. Take a look, especially if you’re a programmer. Throughout ScraperWiki’s history, we’ve had some staff working remotely – one for a while as far away as New York! Sometimes staff have had to move away for other reasons, and have continued to work for […]

QuickCode is the new name for ScraperWiki (the product)

Our original browser coding product, ScraperWiki, is being reborn. We’re pleased to announce it is now called QuickCode. We’ve found that the most popular use for QuickCode is to increase coding skills in numerate staff, while solving operational data problems. What does that mean? I’ll give two examples. Department for Communities and Local Government run […]

Learning to code bots at ONS

The Office for National Statistics releases over 600 national statistics every year. They came to ScraperWiki to help improve their backend processing, so they could build a more usable web interface for people to download data. We created an on-premises environment where their numerate staff learnt a minimal amount of coding, and now create short […]

Running a code club at DCLG

The Department for Communities and Local Government (DCLG) has to track activity across more than 500 local authorities and countless other agencies. They needed a better way to handle this diversity and complexity of data, so decided to use ScraperWiki to run a club to train staff to code. Martin Waudby, data specialist, said: I […]

Highlights of 3 years of making an AI newsreader

We’ve spent three years working on a research and commercialisation project making natural language processing software to reconstruct chains of events from news stories, representing them as linked data. If you haven’t heard of Newsreader before, our one year in blog post is a good place to start. We recently had our final meeting in […]

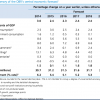

Saving time with GOV.UK design standards

While building the Civil Service People Survey (CSPS) site, ScraperWiki had to deal with the complexities of suppressing data to avoid privacy leaks and making technology to process tens of millions of rows in a fraction of a second. We didn’t also have time to spend on basic web design. Luckily the Government’s Resources for designers, […]

6 lessons from sharing humanitarian data

This post is a write-up of the talk I gave at Strata London in May 2015 called “Sharing humanitarian data at the United Nations“. You can find the slides on that page. The Humanitarian Data Exchange (HDX) is an unusual data hub. It’s made by the UN, and is successfully used by agencies, NGOs, companies, […]

Over a billion public PDFs

You can get a guesstimate for the number of PDFs in the world by searching for filetype:pdf on a web search engine. These are the results I got in August 2015 – follow the links to see for yourself. Google Bing Number of PDFs 1.8 billion 84 million Number of Excel files 14 million 6 […]

We’re hiring! Technical Architect

We’ve lots of interesting projects on – with clients like the United Nations and the Cabinet Office, and with our own work building products such as PDFTables.com. Currently we’re after a Technical Architect, full details on our jobs page. We’re a small company, so roles depend on individual people. Get in touch if something doesn’t […]