Big fat aspx pages for thin data

My work is more with the practice of webscraping, and less in the high-faluting business plans and product-market-fit leaning agility. At the end of the day, someone must have done some actual webscraping — and the harder it is the better.

During the final hours of the Columbia University hack day, I got to work on a corker in the form of the New York State Joint Committee on Public Ethics Lobbying filing system.

This is an aspx website which is truly shocking. The programmer who made it should be fired — except it looks like he probably got it to a visibly working stage, and then simply walked away from the mess he created without finding out why it was running so slowly.

Directions:

1. Start on this page.

2. Click on 2. Client Query – Click here to execute Client Query.

3. Select Registration Year: 2011

4. Click the [Search] button

[ Don’t try to use the browser’s back button as there is a piece of code on the starting page that reads: <script language=”javascript”>history.forward();</script> ]

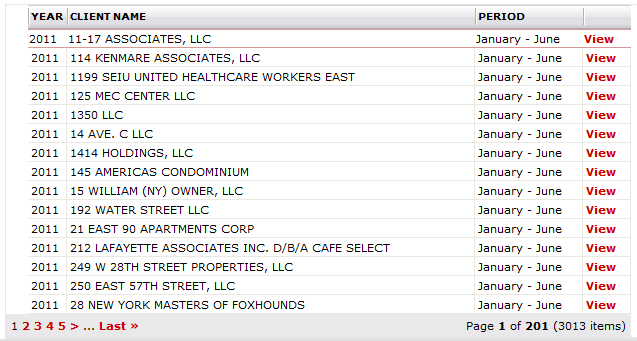

A page called LB_QReports.aspx will be downloaded, which is the same as the previous page, except it is 1.05Mbs long and renders a very small table which looks like this:

If you are able to look at the page source you will find thousands of lines of the form:

<div id="DisplayGrid_0_14_2367">

<a id="DisplayGrid_0_14_2367_ViewBTN"

href="javascript:__doPostBack('DisplayGrid_0_14_2367$ViewBTN','')">View</a>

</div>

Followed by a very long section which begins like:

window.DisplayGrid = new ComponentArt_Grid('DisplayGrid');

DisplayGrid.Data = [[5400,2011,'N','11-17 ASSOCIATES, LLC','11-17

ASSOCIATES, LLC','','11-17 ASSOCIATES, LLC','NEW YORK','NY',10000,

'APR',40805,'January - June','11201',],[6596,2011,'N','114 KENMARE

ASSOCIATES, LLC','114 KENMARE ASSOCIATES, LLC','','114 KENMARE

ASSOCIATES, LLC','NEW YORK','NY',11961,'APR',41521,'January -

June','10012',],[4097,2011,'N','1199 SEIU UNITED HEALTHCARE

WORKERS EAST','1199 SEIU UNITED HEALTHCARE WORKERS EAST','','1199

SEIU UNITED HEALTHCARE WORKERS EAST','NEW YORK','NY',252081,'APR',

40344,'January - June','10036',],...

This DisplayGrid object is thousands of lists long. So although you only get 15 records in the table at a time, your browser has been given the complete set of data at once for the javascript to pagenate.

Great, I thought. This is easy. I simply have to parse out this gigantic array as json and poke it into the database.

Unfortunately, although it can be interpreted by the javascript machine, it’s not valid json. The quotes are of the wrong type, there are trailing commas, and we need to deal with the escaped apostrophes.

mtable = re.search("(?s)DisplayGrid.Data =s*([[.*?]])", html)

jtable = mtable.group(1)

jtable = jtable.replace("\'", ";;;APOS;;;")

jtable = jtable.replace("'", '"')

jtable = jtable.replace(";;;APOS;;;", "'")

jtable = jtable.replace(",]", "]")

jdata = json.loads(jtable)

Then it’s a matter of working out the headers of the table and storing it into the database.

(Un)Fortunately, there’s more data about the lobbying disclosure than is present in this table if you click on those View links on each line, such as person names, addresses, amounts of money, and what was lobbied.

If you hover your mouse above one of these links you will see it’s of the form: javascript:__doPostBack(‘DisplayGrid_0_14_2134$ViewBTN’,”).

At this point it’s worth a recap on how to get along with an asp webpage, because that is what this is.

[The scraper I am working on is ny_state_lobby, if you want to take a look.]

Here is the code for getting this far, to the point where we can click on these View links:

cj = mechanize.CookieJar()

br = mechanize.Browser()

br.addheaders = [('User-agent', 'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.1) Gecko/2008071615 Fedora/3.0.1-1.fc9 Firefox/3.0.1')]

br.set_cookiejar(cj)

# get to the form (with its cookies)

response = br.open("https://apps.jcope.ny.gov/lrr/Menu_reports_public.aspx")

for a in br.links():

if a.text == 'Click here to execute Client Query':

link = a

response = br.follow_link(link)

# fill in the form

br.select_form("form1")

br["ddlQYear"] = ["2011"]

response = br.submit()

print response.read() # this gives massive sets of data

br.select_form("form1")

br.set_all_readonly(False)

The way to do those clicks onto “DisplayGrid_0_14_%d$ViewBTN” (View) buttons is with the following function that does the appropriate __doPostBack action.

def GetLobbyGrid(d, year):

dt = 'DisplayGrid_0_14_%d$ViewBTN' % d

br["__EVENTTARGET"] = dt

br["__EVENTARGUMENT"] = ''

br.find_control("btnSearch").disabled = True

request = br.click()

response1 = br1.open(request)

print response1.read()

…And you will find you will have got exactly the same page as before — including that 1Mb fake json data blob.

Except it’s not quite exactly the same. There is a tiny new little section of javascript in the page, right at the bottom. (I believe I discovered it by watching the network traffic on the browser when following the link.)

<script language=javascript>var myWin;myWin=window.open( 'LB_HtmlCSR.aspx?x=EOv...QOn','test','width=900,height=450,toolbar =no,titlebar=no,location=center,directories=no, status=no,menubar= yes,scrollbars=yes,resizable=yes');myWin.focus();</script>

This contains the secret new link you have to click on to get the lobbyist information.

html1 = response1.read()

root1 = lxml.html.fromstring(html1)

for s in root1.cssselect("script"):

if s.text:

ms = re.match("var myWin;myWin=window.open('(LB_HtmlCSR.aspx?.*?)',", s.text)

if ms:

loblink = ms.group(1)

uloblink = urlparse.urljoin(br1.geturl(), loblink)

response2 = br1.open(uloblink)

print response2.read() # this is the page you want

So, anyway, that’s where I’m up to. I’ve started the ny_state_lobby_parse scraper to work on these pages, but I don’t have time to carry it on right now (too much blogging).

The scraper itself is going to operate very slowly because for each record it needs to download 1Mb of uselessly generated data to get the individual link to the lobbyist. And I don’t have reliable unique keys for it yet. It’s possible I could make them by associating the button name with the corresponding record from that DisplayGrid table, but that’s for later.

For now I’ve got to go and do other things. But at least we’re a little closer to having the picture of what is being disclosed into this database. The big deal, as always, is finishing it off.

Yes that website was pretty awful. That’s just about what I would expect from a state government website though.