ScraperWiki – Professional Services

How would you go about collecting, structuring and analysing 100,000 reports on Romanian companies?

You could use ScraperWiki to write and host your own computer code that carries out the scraping you need, and then use our other self-service tools to clean and analyse the data.

But sometimes writing your own code is not a feasible solution. Perhaps your organisation does not have the coding skills in the required area. Or maybe an internal team needs support to deploy their own solutions, or lacks the time and resources to get the job done quickly.

That’s why, alongside our new platform, ScraperWiki also offers a professional service specifically tailored to corporate customers’ needs.

Recently, for example, we acquired data from the Romanian Ministry of Finance for a client. Our expert data scientists wrote a computer program to ingest and structure the data, which was then fed into the client’s private datahub on ScraperWiki. The client could then use ScraperWiki’s ecosystem of built-in tools to explore their data and carry out their analysis…

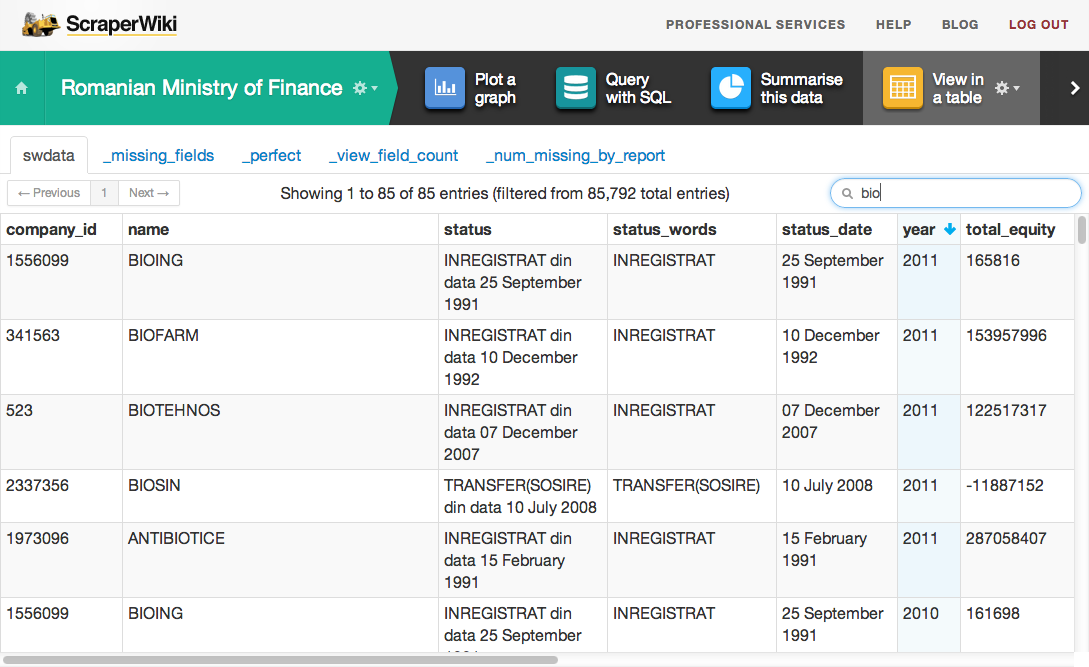

Like the “View in a table” tool, which lets them page through the data, sort it and filter it:

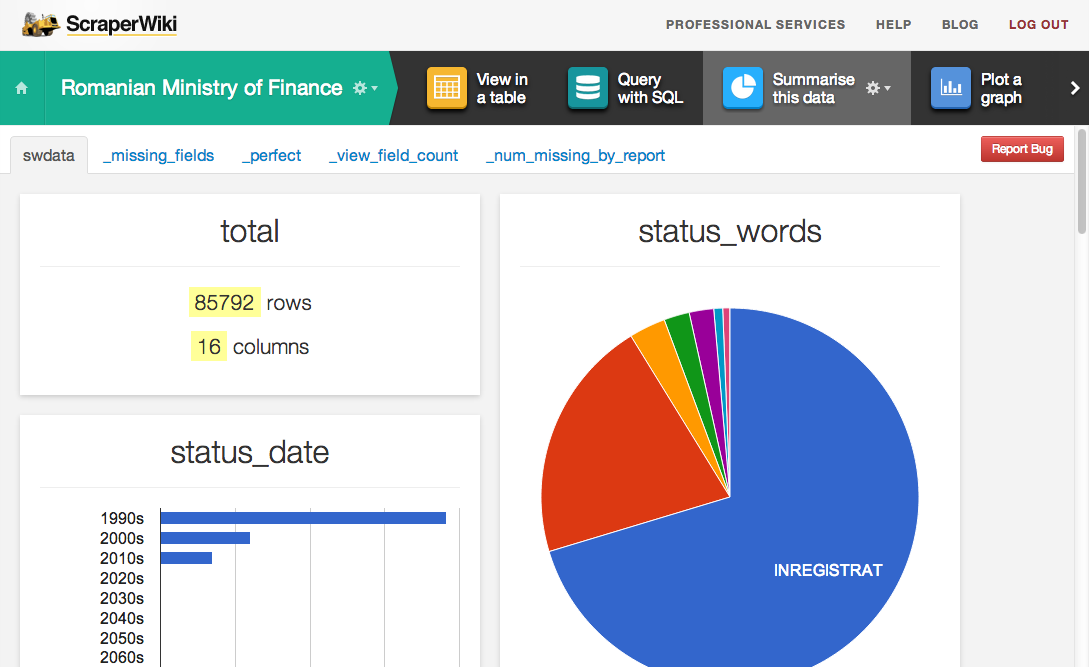

The “Summarise this data” tool, which gives them a quick overview of their data by looking at each column and making an intelligent decision as to how best to portray it:

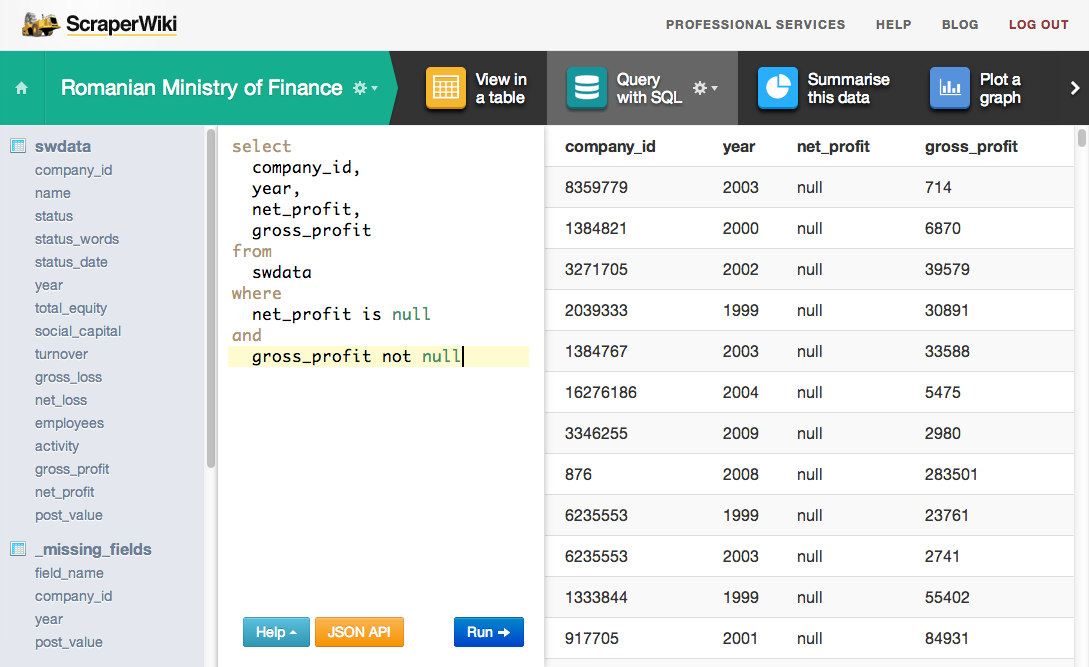

And the “Query with SQL” tool, which allows them to ask sophisticated questions of their data using the industry-standard database query language, and then export the live data to their own systems.
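To give a flavour of the kind of question SQL makes easy, here is a minimal sketch in Python. It is an illustration only, not the client's actual query: the `companies` table, its columns (`name`, `county`, `turnover`) and the sample rows are all hypothetical, and an in-memory SQLite database stands in for the hosted dataset.

```python
import csv
import io
import sqlite3

# Hypothetical sample rows; the real dataset's schema is not described in this post.
rows = [
    ("Alfa SRL", "Cluj", 120000.0),
    ("Beta SA", "Cluj", 80000.0),
    ("Gama SRL", "Iasi", 50000.0),
]

# An in-memory SQLite database stands in for the hosted dataset (an assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE companies (name TEXT, county TEXT, turnover REAL)")
conn.executemany("INSERT INTO companies VALUES (?, ?, ?)", rows)


def turnover_by_county(connection):
    """Return (county, company_count, total_turnover) rows, largest total first."""
    query = """
        SELECT county, COUNT(*) AS company_count, SUM(turnover) AS total_turnover
        FROM companies
        GROUP BY county
        ORDER BY total_turnover DESC
    """
    return connection.execute(query).fetchall()


def to_csv(result_rows, header):
    """Serialise query results as CSV text, ready to hand to another system."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(result_rows)
    return buffer.getvalue()


summary = turnover_by_county(conn)
print(to_csv(summary, ["county", "company_count", "total_turnover"]))
```

The same `GROUP BY` pattern extends to other questions, such as counting reports per year or ranking companies within a sector, and exporting as CSV keeps the results usable in spreadsheets and other downstream systems.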

In addition, ScraperWiki’s data scientists handcrafted custom visualisations and analysis for the client. In this case we made a web page which pulled in data directly from the dataset and presented the results as a living report. For other projects we have written and presented analysis using R, a very widely used open source statistical analysis package, and Tableau, the industry-leading business intelligence application.

The key advantage of using ScraperWiki for this sort of project is that there is no software to install locally on your computer. Inside corporate environments the desktop PC is often locked down, meaning custom analysis software cannot easily be deployed, and ongoing maintenance presents further problems. Even in more open environments, users do not find it convenient to install custom software for just one piece of analysis. Hosting data analysis functionality on the web has become an entirely practical proposition: storing data on the web has long been commonplace, and it is a facility we all use every day. More recently, developments in browser technology have made it possible to build a rich user experience for delivering data analysis online.

Combine these technologies with our expert data scientists and you get a winning solution to your data questions – all in the form of ScraperWiki Professional Services.