Hi, I’m Sophie

Hi, my name is Sophie Buckley. I’m an AS student studying Computing, Maths, Physics and Chemistry, and I’m interested in programming, games, art and reading. I’m also the latest in a line of privileged ScraperWiki interns, and although my time here has been tragically short, the ScraperWiki team have done an amazing job of making every second of my experience interesting, informative and enjoyable. 🙂

One of the first things I learned about ScraperWiki is their use of the XP (Extreme Programming) methodology. On my first day I arrived just in time for ‘stand up’, where every morning the members of the team stand in a circle and share with everyone else what they did the previous working day, what they intend to do that day and what they’re hoping to achieve. Doing this every morning was a bit nerve-racking because I haven’t got the best memory in the world, but Zarino (who’d been assigned the role of looking after me for the week) was always there to give me a helping hand and go first.

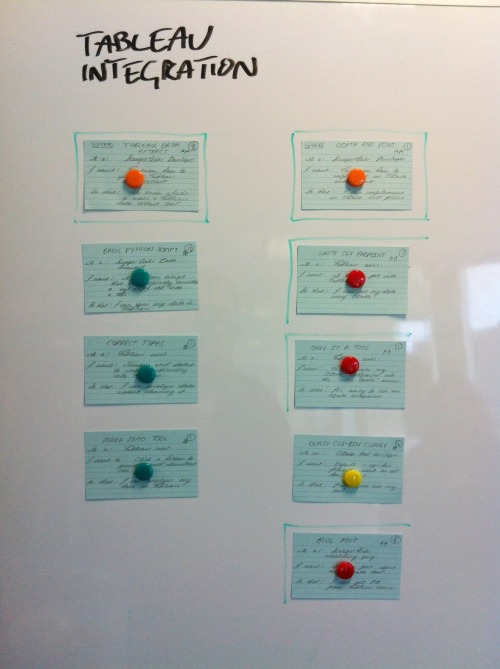

He also showed me how they use cards to track and estimate the logical steps needed to complete tasks, and how they investigate possible routes with ‘spike’ cards. The time taken to complete a ‘spike’ isn’t estimated; instead it’s artificially time-boxed (usually to ½ or 1 day). The purpose of a spike is to explore a possible route and find out whether it’s the best one, without having to commit to it.

Zarino had set aside the week of my internship so that both of us could work on a new feature: an automatic way to get data out of ScraperWiki and into Tableau. We investigated on Monday and concluded that we had two options: either generate “Tableau Data Extract” (TDE) files for users to download, or create an “OData” endpoint that could serve up the live data. Both routes were completely unknown, so we wrote a spike card for each of them to determine which one was best.

Monday and Tuesday consisted of trying to make a TDE file. During this time I used Vim, SSH and Git, participated in pair programming, was introduced to CoffeeScript (which I really enjoyed using) and was shown how to write unit tests for what we had written.

On Wednesday we decided to look further into the OData endpoint, and for the rest of the week I learned more about Atom/XML, wrote a Python ‘Flask’ app, and built a user interface with HTML and JavaScript.

One of the great things about ScraperWiki is the friendly nature of everybody who works here. Other members of the team were willing to help where they could and were more than happy to share with me what they were working on when I was curious. They were genuinely interested in me and my studies, and were kind enough to share their experiences with me, which meant that no tea break (tea being in abundance in the ScraperWiki office!) or lunch was spent awkwardly with people I barely knew.

The guys at ScraperWiki like to do stuff outside the office too, and on Tuesday I was invited to see Her at FACT, which was definitely one of the highlights of the week! Other highlights included the awesome burgers at FSK and Ian’s ecstatic reaction when our spike magically piped his data into Tableau.

Overall, I’m so glad that I took those hesitant first steps in trying to become an intern at ScraperWiki by emailing Francis all those months ago; this has truly been an amazing week and I’m so grateful to everyone (especially Zarino!) for teaching me so much and putting up with me!

If you’d like to hear more from me and keep up with what I’m doing, you can check out my Twitter page or email me. 🙂