ScraperWiki: A story about two boys, web scraping and a worm

“It’s like a buddy movie,” she said.

Not quite the kind of story lead I’m used to. But what do you expect if you employ journalists in a tech startup?

“Tell them about that computer game of his that you bought with your pocket money.”

She means the one with the risqué name.

I think I’d rather tell you about screen scraping, and why it is fundamental to the nature of data.

About how Julian spent almost a decade scraping himself to death, until he decided to step back and build a tool to make it easier.

I’ll give one example.

Two boys

In 2003, Julian wanted to know how his MP had voted on the Iraq war.

The lists of votes were there, on the www.parliament.uk website. But buried behind dozens of mouse clicks.

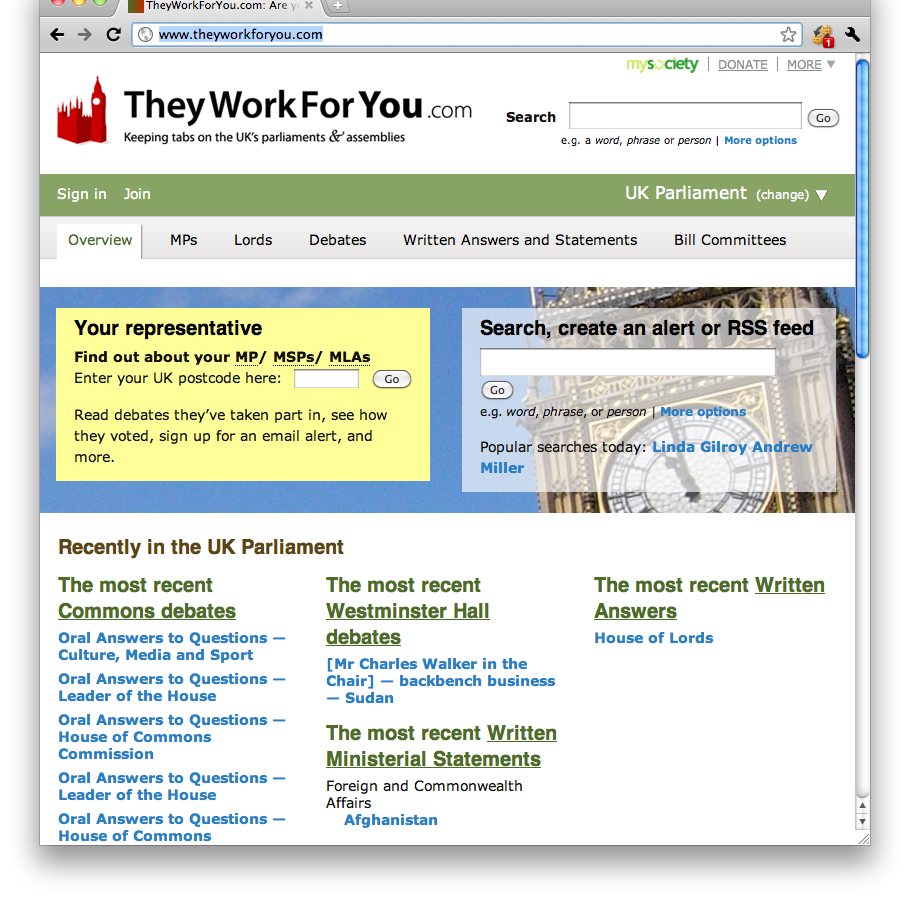

Julian and I wrote some software to read the pages for us, and created what eventually became TheyWorkForYou.

We could slice and dice the votes, mix them with some knowledge from political anoraks, and create simple sentences. Mini computer-generated stories.

“Louise Ellman voted very strongly for the Iraq war.”

You can see it, and other stories, there now. Try the postcode of the ScraperWiki office, L3 5RF.
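For the curious, here is a rough sketch of that idea in Python: read a page of votes, count them up, and turn the count into a sentence. It is not the code we wrote. The HTML layout, the `scrape_votes` and `describe` names, the thresholds, and the assumption that an “Aye” counts as a vote for the war are all made up for illustration.

```python
from html.parser import HTMLParser

# Hypothetical markup: the real parliament.uk pages looked nothing like this.
SAMPLE_HTML = """
<table>
<tr><td>Louise Ellman</td><td>Aye</td></tr>
<tr><td>Louise Ellman</td><td>Aye</td></tr>
<tr><td>Louise Ellman</td><td>Aye</td></tr>
<tr><td>Another Member</td><td>No</td></tr>
</table>
"""


class VoteTableParser(HTMLParser):
    """Collect the text of each <td> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag == "td":
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell and self.rows:
            self.rows[-1].append(data.strip())


def scrape_votes(html):
    """Return a list of (mp_name, vote) pairs found in the page."""
    parser = VoteTableParser()
    parser.feed(html)
    return [(row[0], row[1]) for row in parser.rows if len(row) == 2]


def describe(mp, votes, policy="the Iraq war"):
    """Turn one MP's votes into a one-line story (illustrative thresholds)."""
    own = [vote for name, vote in votes if name == mp]
    if not own:
        return f"{mp} has no recorded votes on {policy}."
    share_for = sum(vote == "Aye" for vote in own) / len(own)
    if share_for >= 0.9:
        strength = "very strongly for"
    elif share_for >= 0.6:
        strength = "moderately for"
    elif share_for > 0.4:
        strength = "a mixture of for and against"
    elif share_for > 0.1:
        strength = "moderately against"
    else:
        strength = "very strongly against"
    return f"{mp} voted {strength} {policy}."


print(describe("Louise Ellman", scrape_votes(SAMPLE_HTML)))
```

The scraping half is tied to one page layout and breaks when that layout changes. The sentence half only cares about the counts.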

I remember the first lobbyist I showed it to. She couldn’t believe it. Decades of work done in an instant by a computer. An encyclopedia of data there in a moment.

Web Scraping

It might seem like a trick at first, as if it was special to Parliament. But actually, everyone does this kind of thing.

Google search is just a giant screen scraper, with one secret sauce algorithm guessing its ranking data.

Facebook uses scraping as a core part of its viral growth to let users easily import their email address book.

There’s lots of messy data in the world. Talk to a geek or a tech company, and you’ll find a screen scraper somewhere.

Why is this?

It’s a Tautology

On the surface, screen scrapers look just like devices to work round incomplete IT systems.

Parliament used to publish quite rough HTML, and certainly had no database of MP voting records. So yes, scrapers are partly a clever trick to get round that.

But even if Parliament had published it in a structured format, their publishing would never have been quite right for what we wanted to do.

We still would have had to write a data loader (search for ‘ETL’ to see what a big industry that is). We still would have had to refine the data, linking to other datasets we used about MPs. We still would have had to validate it, like when we found the dead MP who voted.

It would have needed quite a bit of programming, and that programming would have looked very much like a screen scraper.
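To give a flavour of the validating part, here is a minimal sketch in Python of the kind of check that catches a dead MP voting. The record layout, the IDs, the names and the dates are all invented for this example. It assumes the votes have already been loaded and linked to a separate dataset of MPs.

```python
from datetime import date

# Hypothetical reference data about MPs, keyed by an invented ID.
mps = {
    "mp-001": {"name": "Example Member A", "died": None},
    "mp-002": {"name": "Example Member B", "died": date(2003, 1, 15)},
}

# Hypothetical vote records, as they might come out of a loader or scraper.
votes = [
    {"mp_id": "mp-001", "date": date(2003, 3, 18), "vote": "aye"},
    {"mp_id": "mp-002", "date": date(2003, 3, 18), "vote": "no"},
    {"mp_id": "mp-999", "date": date(2003, 3, 18), "vote": "aye"},
]


def validate(votes, mps):
    """Return (vote, reason) pairs for records that cannot be right."""
    problems = []
    for vote in votes:
        mp = mps.get(vote["mp_id"])
        if mp is None:
            # The vote does not link to any MP we know about.
            problems.append((vote, "unknown MP id"))
        elif mp["died"] is not None and vote["date"] > mp["died"]:
            # The linked MP was already dead on the day of the vote.
            problems.append((vote, "vote recorded after date of death"))
    return problems


for vote, reason in validate(votes, mps):
    print(vote["mp_id"], vote["date"], reason)
```

None of this depends on whether the votes arrived as rough HTML or as a tidy file. The checking still has to be written.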

And then, of course, we still would have had to build the application, connecting the data to the code that delivered the tool that millions of wonks and citizens use every year.

Core to it all is this: When you’re reusing data for a new purpose, a purpose the original creator didn’t intend, you have to work at it.

Put like that, it’s a tautology.

A journalist doesn’t just want to know what the person who created the data wanted them to know.

Scrape Through

So when Julian asked me to be CEO of ScraperWiki, that’s what went through my head.

Secrets buried everywhere.

The same kind of benefits we found for politics in TheyWorkForYou, but scattered across a hundred countries of public data, buried in a thousand corporate intranets.

If only there was a tool for that.

A Worm

And what about my pocket money?

Nicola was talking about Fat Worm Blows a Sparky.

Julian’s boss’s wife gave it its risqué name while blowing bubbles in the bath. It was 1986. Computers were new. He was 17.

Fat Worm cost me £9.95. I was 12.

![Loading screen](http://www.worldofspectrum.org/showscreen.cgi?screen=screens/load/f/gif/FatWormBlowsASparky.gif)

I was on at most £1 a week, so that was ten weeks of savings.

Luckily, the 3D graphics were incomprehensibly good for the mid 1980s. Wonder who the genius programmer is.

I hadn’t met him yet, but it was the start of this story.