Why the Government scraped itself

We wrote last month about Alphagov, the Cabinet Office’s prototype of a more usable, central Government website. It made extensive use of ScraperWiki.

The question everyone asks – why was the Government scraping its own sites? Let’s take a look.


56 scrapers were used. You can find them tagged “alphagov” on the ScraperWiki website. Ten more are not yet in use, making 66 in all. They were written by 14 different developers from both inside and outside the Alphagov team (more on that process another day).

The bulk of scrapers were there to migrate and combine content, like transcripts of ministerial speeches and details of government consultations. These were then imported into sections of alpha.gov.uk – speeches are here, and the consultations here.

As far as I know, this is the first time the Government has organised a cross-government view of speeches and consultations (although third parties like TellThemWhatYouThink have covered similar ground before). This is vital for citizens who don’t fall into particular departmental categories, but want to track things based on topics that matter to them.

The rest of the scrapers were there to turn content into datasets. You need a dataset to make something more usable.

Two examples:

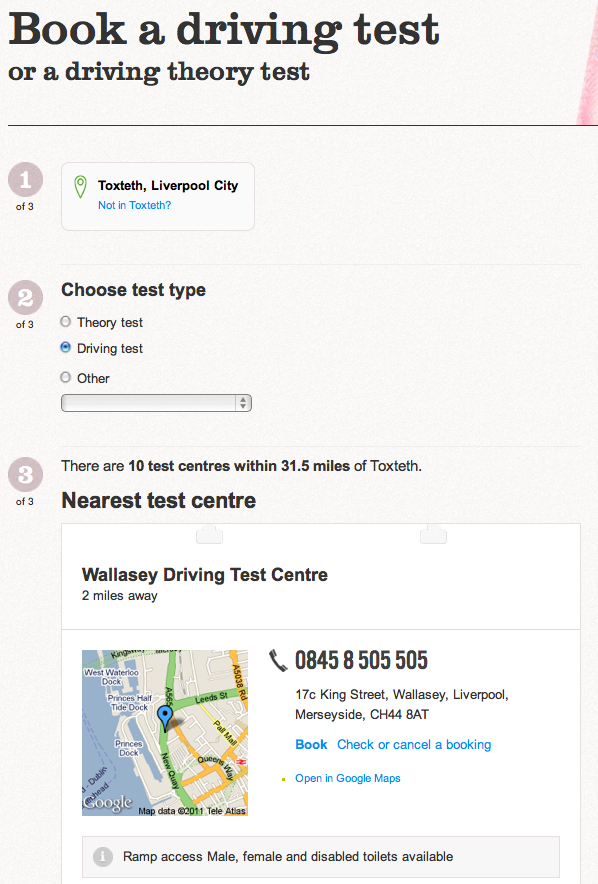

1. The list of DVLA driving test centres has been turned into the beginnings of a simple app to book a driving test. Compare it with the original DfT site here.

2. The UK Bank Holiday data that ScraperWiki user Aubergene scraped last year was improved and used for the alpha.gov.uk Bank Holiday page.
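To make “turning content into a dataset” concrete, here is a minimal sketch in Python using only the standard library. It assumes a hypothetical page that lists centres as `<li>` items; the real DfT markup and the actual Alphagov scrapers may look quite different, and `SAMPLE_HTML`, `ListItemScraper`, `scrape` and `to_csv` are illustrative names, not ScraperWiki APIs.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment standing in for a published list of test centres.
# The real DfT/DVLA markup is not reproduced in this post.
SAMPLE_HTML = """
<ul class="centres">
  <li>Example Centre A</li>
  <li>Example Centre B</li>
  <li>Example Centre C</li>
</ul>
"""


class ListItemScraper(HTMLParser):
    """Collects the text of every <li> element as one dataset row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_item = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._in_item = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "li" and self._in_item:
            # Join the text fragments seen inside this <li> into one field.
            self.rows.append({"name": "".join(self._buffer).strip()})
            self._in_item = False

    def handle_data(self, data):
        if self._in_item:
            self._buffer.append(data)


def scrape(html):
    """Turn an HTML list into a list of dict rows."""
    parser = ListItemScraper()
    parser.feed(html)
    parser.close()
    return parser.rows


def to_csv(rows):
    """Serialise the rows as CSV, a file people can download and reuse."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["name"])
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


if __name__ == "__main__":
    print(to_csv(scrape(SAMPLE_HTML)))
```

Once the content is rows rather than prose, it can be sorted, filtered or fed into something like the booking prototype, which is the point being made above.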

It seems strange at first for a Government to scrape its own websites. It isn’t, though. It lets them move quickly (agile!) and concentrate first on the important part: making the experience for citizens as good as possible.

And now, thanks to Alphagov using ScraperWiki, you can download and use all the data yourself – or repurpose the scraping scripts for something else.

Let us know if you do something with it!

Tragically, those of us with experience of working on the inside won’t be at all surprised by the notion of government scraping its own websites.

If you are wondering why the government would want to scrape its own sites, I’d suggest that high up on the list is the fact that many of the websites are run by third parties under outsourcing agreements. For a different branch of government to get hold of the underlying data that drives such a site (in any kind of useful format) would require an additional contractual agreement to get the outsourcer to give up the data.

More generally, information within government is very much held in silos, and these websites represent examples of that.

Actually, most of the functionality on alphagov already exists within Directgov. Public consultation information across all those organisations has been available on Directgov for over a year (http://consultations.direct.gov.uk/).

Other functions, such as searching for services by local authority, are also there (http://mycouncil.direct.gov.uk/).

What they seem to have done is change/improve the navigation so that it is basically a search of government websites. I think it looks great, but isn’t it just reinventing the wheel?

Thanks for your comment Claire!

I had no idea Directgov had a consultations page like that. Thank you for pointing it out.

It’s a great idea, but unfortunately it has been very badly executed:

1. It is very hard to find – it doesn’t appear in Google. For some reason it is deliberately blocked (see http://consultations.direct.gov.uk/robots.txt). This means you have to click several times from a Google search for “government consultations” to get to it, and carefully read the text on two long Directgov pages and pick out the right links. I bet it has very low traffic.

2. It has no interface for browsing. This means if you don’t know anything about Government consultations and you just want to see what kind of thing there is, there is nothing you can do. You can’t even search for an empty string.

3. The searching interface is very inflexible, like an early web interface. These days it could have much clearer presentation, rather than an overwhelming form up front. I’m thinking of things like interactive sorting, and nice calendar widgets for choosing date ranges. Lots of details.

4. It has no alerting system, such as RSS, email or Twitter. Nor is there any easy way for anyone to build an alert on top of its content (because it doesn’t appear in Google). Consultations
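The `robots.txt` point in item 1 is easy to check programmatically. This minimal sketch uses Python’s standard `urllib.robotparser` on a hypothetical file containing `User-agent: *` and `Disallow: /`. The actual contents of the Directgov file are not reproduced here, so treat `BLOCK_ALL` as an assumption about what “deliberately blocked” looks like; `can_crawl` is an illustrative helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that tells every crawler to stay out of the whole site.
# This is an assumption; the real Directgov file is not reproduced here.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

# For contrast: an empty Disallow line permits everything.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""


def can_crawl(robots_txt, user_agent, url):
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "http://consultations.direct.gov.uk/"
    print("blocked file:", can_crawl(BLOCK_ALL, "Googlebot", url))
    print("open file:", can_crawl(ALLOW_ALL, "Googlebot", url))
```

A crawler that honours the file, as Google’s does, will not fetch any page on a site served with the first version, which is why such a site is so hard to reach from a search.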

Fixing all the issues above is exactly the point of Alphagov. I agree that their alpha prototype hasn’t solved everything there, but I at least have some hope that their final version will, if it is commissioned.

I could write a similar detailed criticism of the “Connect to your council” site.

To start you off, when I click in the “Enter details” box I have to manually delete “e.g. SE1 or Lambeth” before I can type my own postcode in. It has been fairly standard on the web for more than 10 years to automatically remove such help text when the text box is clicked in.

So yes, Alphagov is in part “reinventing the wheel”. It is building a much better wheel, suited to how people use the web today, and focussing ruthlessly on the details of the experience of individual citizens trying to get something done.

This is quite a hard thing to do for a web designer, and quite subtle. But for the citizen used to services from Google and Facebook it is as plain as day. Millions of people are frustrated every day by difficulty in using Government websites.

Yes, in almost every way alpha gov is reinventing the wheel, but I think it wasn’t a very good wheel to start with. All too often we are keen to move on to the next big thing, when the reality is we haven’t got the first thing right. Rightly or wrongly, direct.gov has become a bit of a beast: it has almost everything, but no one can find anything. Alpha gov is attempting to reverse that. Not the easiest thing in the world.

In the same way, we redeveloped http://liverpool.gov.uk: we did nothing new, we just concentrated on getting what was already there and available done right.

Agreed, the alphagovuk approach is just rolling the wheel in slightly more webservicey mud. This is not too dreadful, and it is to be expected, since incremental innovation is the only way for bureaucratic beasts. There is only so much one can do with front-end eye candy. What needs to change are the organisational processes around information and communication flows, replacing the hordes of middlemen/data clerks with intelligent, adaptable and direct tools; the paper trails could get so much more efficient. Anyone else up for a processalphagov? 😉

Hmm, is the theory that the personnel and details will change more often than the site structure, which justifies the effort of writing scrapers for the gov sites?