CIJ Workshop in Blog Form!

Here’s an introduction to a little thing called ScraperWiki. Please watch it so that you don’t develop bad habits that could annoy the programmers you want helping you!

OBJECTIVES FOR THIS WORKSHOP

- Have your own ScraperWiki account and understand all the features and navigation of the site

- Scrape twitter for different terms and export the dataset

- Query datasets on ScraperWiki using SQL and learn about our API

- Create a table from the data in a scraper and understand how views work

- The main objective is to understand ScraperWiki capabilities and potential!

Exercise 0. Make an account

Go to http://scraperwiki.com and make an account. You’ll need this to save your scrapers. We encourage you to use your full name, but it’s not obligatory! Before you start you may like to open a 2nd tab/window in your browser as that will allow you to go back and forth between the blog instructions and the exercise! The exercises are all written using the Python Programming language.

Outcome:

You’ll have your very own ScraperWiki account (lucky you!). Welcome to your dashboard. Please fill out your profile when you have time. You’ll automatically get email updates on how your scrapers are doing. We’ll show you all the features you have, where your data goes and how to schedule your scraper.

The following scrapers you’ll be copying and pasting can be found on my profile page: http://scraperwiki.com/profiles/NicolaHughes/

Exercise 1. Basic data scraping

We’ll start by looking at a scraper that collects results from Twitter. You can use this to store tweets on a topic of your choice into a ScraperWiki database.

1. Edit and run a scraper

- Go to http://scraperwiki.com/scrapers/basic_twitter_scraper_2/.

- Click Fork Scraper. You’ve now created a copy of the scraper. Click Save Scraper located on the right side of the window to save the scraper.

- Position your cursor on Line 11 between the two open speech marks ‘ ‘ – This will allow you to edit the query to a Twitter search term of your choice. For example, if you want to find tweets about an event type in the event hashtag e.g. QUERY=’#cij2011’.

- Click Run and watch the scraper run.

- Save the scraper. Click Save Scraper located on the right side of the window to save the scraper.

2. Download your scraped data

- On the top right-hand corner there are 4 tabs – you are in the Edit (Python) tab, click the Scraper tab. You will see all of your data in tabular format.

- Click on ‘Download spreadsheet (CSV)’ to download and open your data as a spreadsheet. If you have Microsoft Excel or Open Office you can analyse that data using standard spreadsheet functions.

- Double-click on your spreadsheet to open it.

Outcome:

Congratulations! You have created your very own twitter scraper (*applause*) that doesn’t depend on the twitchy twitter API. You’ve scraped your data and added it to the data store. You’ve also taken the data into a csv file. The scraper is scheduled to run daily and any new data will be added automatically. Check out the video on how to change the schedule.

Exercise 2. Analysing some real-life data

In this exercise, we’ll look at a real-life use of ScraperWiki.

The Press Complaints Commission doesn’t release the details of the complaints in a way that is easy to analyse, and it doesn’t release many statistics about its complaints.

It would be interesting to know which newspaper was the most complained about.

However, as one of our users has scraped the data from the PCC site it can be analysed – and crucially, other people can see the data too, without having to write their own scrapers.

Here is the link: http://scraperwiki.com/scrapers/pcc-decisions-mark-3/

There’s no need to fork it. You can analyse the data from any scraper, not just your own.

As the Open Knowledge Foundation say, ‘The best use of your data is one that someone else will find’.

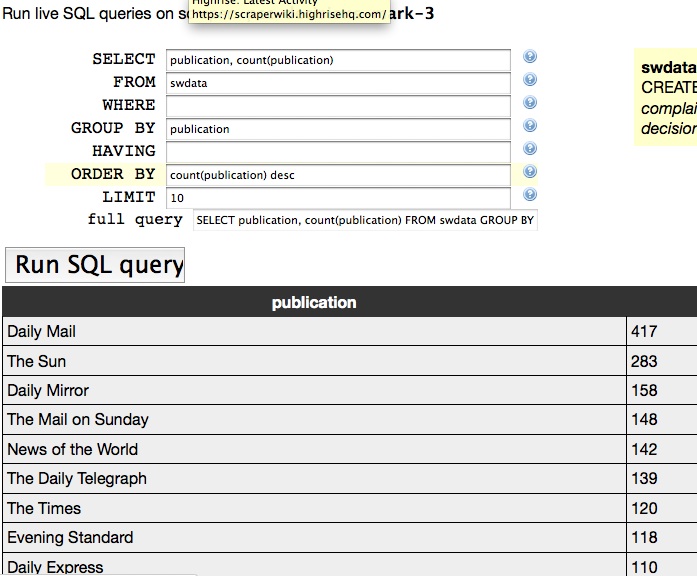

Instead, we are going to create a new view on the data. However we are not going to create a view from the beginning – we will fork a view that has already been created! Go to http://scraperwiki.com/views/live_sql_query_view_3/, fork the view and save it to your dashboard. You can also change the name of the view by clicking on the top line beside your name “Live SQL Query View” and change it to “My analysis of the PCC Data”. Save it again by clicking ‘Save’. Take a few moments to study the table and pay particular attention to the column headings.

- There are four tabs on the top right hand corner of the screen – click the ‘View’ tab. This will take you to the ScraperWiki test card. Click the centre of the test card “Click to open this view”.

- Using this SQL query view, find out which publications get the most complaints.

- Place the cursor in the ‘SELECT’ box, delete the ‘*’ and click on the word ‘publication’ which appears on the 2nd line of the yellow box (tip: the yellow box contains all of the column headings in the table) to the right of the column, and is positioned on the line under swdata . This will transfer the word ‘publication’ into the your SELECT box. Position your cursor to the right of the word ‘publication’ and type in ‘, count(publication)’. This creates a column that contains the a count of the number of times a publication appears in the dataset that was created from by the original scraper.

- Place your cursor in the ‘GROUP BY’ box, and repeat the process above to select the word ‘publication’ from the yellow box to the right. The GROUP BY statement will group together the publications and so give you the aggregated number of times each publication has appeared in the PCC.

- Place your cursor in the ‘ORDER BY’ box, remove the existing text and then type ‘count(publication) desc’. The ORDER BY keyword is used to sort the result-set. This will put the result-set in order of how many times the publication has received a complaint with the most complained about publication appearing at the top.

- In the ‘LIMIT’ box type ‘10’ to see the top 10.

- Hit the ‘Run SQL query’ button and see your results. At the bottom of the column the last item is ‘full query’ – yours should read as follows: (it may be truncated)

SELECT publication, count(publication) FROM swdata GROUP BY publication ORDER BY count(publication) DESC LIMIT 10

It should look like this!

This is a simple query showing you a quick result. The query is not being saved. You can use the Back and Forward browser tabs to see both screens however as soon as you go back to the test card and “Click to see view’ – the query will be reset.

For some really great SQL tutorials check out: http://www.w3schools.com/sql/default.asp

Your Challenge:

Now lets find out who has been making the most complaints – not receiving them. You will be altering the query to find out which complainants have made the most complaints.

Outcome:

So you have just learned a bit about SQL, which is very simple and very powerful. You can query any open data set in ScraperWiki to find the story. Welcome to the wonderful realm of data journalism. Hop aboard our digger and explore.

Exercise 3. Making views out of data

Making a view is a way to present raw data within ScraperWiki. It allows you to do things with the data like analyse it, map it, or create some other way of showing the data.

For example, in the previous exercise we used the view to create a simple SQL Query. The view produced a table but it did not save the results of the query. In this exercise we are going to make a table that gives you the latest result of your query every time you open it up! In effect you will be creating your own live league table.

- Fork and save to your dashboard: http://scraperwiki.com/views/top_10_receivers_of_cabinet_office_money/

- On line 59 change “cabinet_office_spend_data” to “pcc-decisions-mark-3”. This is re-directing the view code to the original PCC scraper.

- On line 60 change the var sqlselect = “ SELECT publication, count(publication) FROM swdata GROUP BY publication ORDER BY count(publication) DESC LIMIT 10 ” query to the SQL query you worked out in the previous exercise.

- Click the ‘PREVIEW’ button which is positioned to the right of the orange documentation button above the ‘console’ window to make sure you’re getting the table you want.

- Click the X on the top right hand corner of the View Preview Window.

- The Heading still refers to the old scraper! Go to the edit view and click on line 21 and replace the old title with ‘Top Complaints by Publication to the PCC’

- Save the View and Preview.

- Go to line 18 and replace “http://scraperwiki.com/scrapers/cabinet_office_spend_data/”>Cabinet Office Spend Data</a> with “http://scraperwiki.com/scrapers/pcc-decisions-mark-3/”>Press Complaints Commission Data</a>. You can also change the ‘this page’ hyperlink from <a href=”http://www.cabinetoffice.gov.uk/resource-library/cabinet-office-spend-data”> to <a href=”http://www.pcc.org.uk/”>.

- Save the view and preview.

- Click View to return to the test card and scroll to the bottom of the screen where you will see the paragraph heading – ‘Add this view to your web site’. You could copy the code and add it to your web site and have the data linked directly. If you don’t have a site, this view is where you can return to get your live league table that will update with your data (wow)!

Note: there is no data stored in a view, only a link to a scraper that is looking at the data in the data store. This is why you can quickly and easily alter a view to look at another scraper at any time. So you van build a viewer once and use it many times.

Check out what the Media Standards Trust made from our PCC scrapers! They made the Unofficial PCC.

Your Challenge:

Build a table of the top 10 receivers of Cabinet Office Money! The original scraper here: http://scraperwiki.com/scrapers/cabinet_office_spend_data/.

So go to your SQL viewer and change the scraper name to “cabinet_office_spend_data” i.e. the name of the scraper for our internal API is the string of letters after ‘scrapers/’ in the URL of the scraper.

Create your query (Hint: you’ll want to look at the ‘Refined’ table as that has the names of the suppliers cleaned to be the same for each spelling, just hit the word ‘Refined’ and the table that is selected for your query will appear in yellow. You’ll also want to use ‘sum’ instead of ‘count’ to sum up the money not how many times the supplier got paid). Then make your table view.

If you want to keep your PCC table just fork it, change the name in the title, save it and change the code to look at “cabinet_office_spend_data” and your query.

Hint: the original table you forked will have the answers!

Also check out this view of the same data set: http://scraperwikiviews.com/run/cabinet_spending_word_cloud_date_slider/

And this view of HMRC spending data that was made in a couple of hours from forking my code: http://scraperwikiviews.com/run/hmrc_spending_pie_chart_date_slider/

Outcome:

You’ll have a live league table that feeds off your scraped data! You’ll see how our setup allows you to constantly view changing data on the web and tell you the stories as they are developing. So you can get data out but you can keep it in and have lots of different views interrogating your data within ScraperWiki.

So let’s work on the journalism in ‘data journalism’ and not just the data. It’s not what can do for your data; it’s what your data can do for you!

So let’s start scraping with ScraperWiki!

Note: Answers in screencast form will be put on the blog in two weeks’ time. So no excuses!

Thanks guys!

Just what I needed for a rainy day. I wanted for a long to get started with Scarperwiki and with this workshop, I know how to start.

Another pretty comprehensive SQL tutorial is at http://www.1keydata.com/sql/sql.html