New ScraperWiki tool lets you extract data from reports with complete accuracy

It’s not always possible to automate data gathering, even with scrapers.

Often we find customers want to regularly update data in ScraperWiki via spreadsheets.

Either they’ve made the spreadsheets via a report from another system (typically one that isn’t on the web), or they gather the data by hand (for example, by phoning someone up every day) and already type it into a spreadsheet.

Today we’re pleased to launch a new Extract Reports tool.

We developed it for the UK Government, and like the ScraperWiki platform it’s open source.

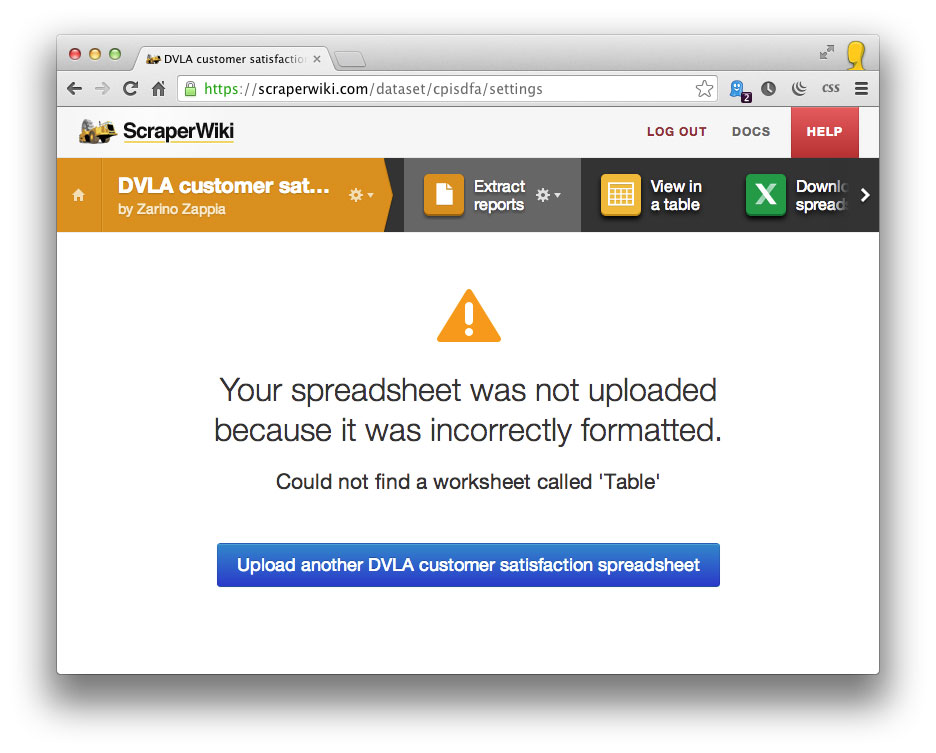

It’s important the person who is uploading the spreadsheet (who often isn’t technical) gets clear and simple error messages if it is misformatted.

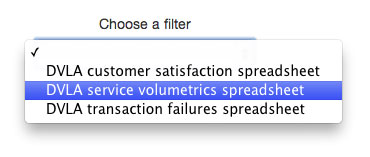

Each type of spreadsheet is configured by a custom filter. These are short pieces of Python code which pull out the data and save it. This makes sure they find the data accurately, and can validate it as thoroughly as possible.

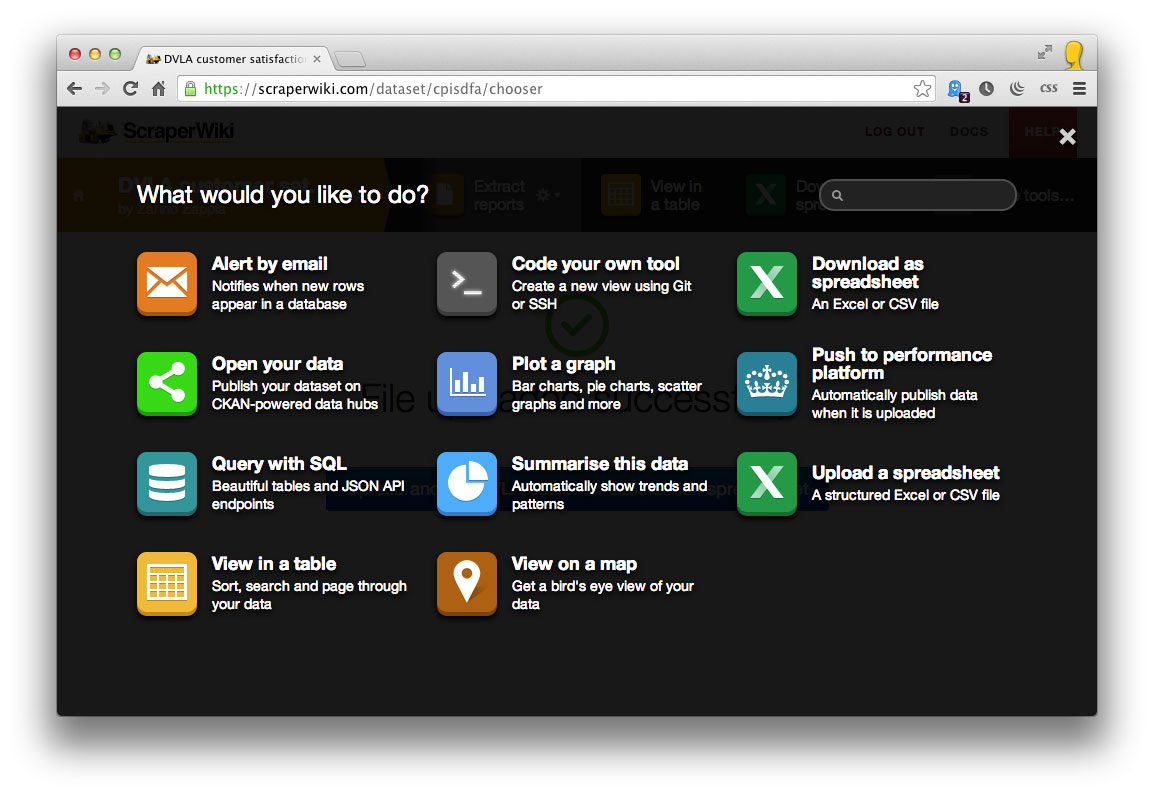
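To give a feel for what such a filter might look like, here is a minimal sketch. It is not the tool's actual filter API: the column layout, the `SpreadsheetError` class, the `extract_rows` and `save` functions and the SQLite table are all hypothetical, and the sheet is assumed to have already been read into a list of rows. It shows the two jobs described above, which are checking the sheet and reporting problems in plain language, then saving the clean data.

```python
import sqlite3
from datetime import datetime

# Hypothetical layout for one type of spreadsheet (an assumption for this sketch).
EXPECTED_HEADER = ["Date", "Region", "Calls handled"]


class SpreadsheetError(Exception):
    """Carries a message written for the person uploading the spreadsheet."""


def extract_rows(rows):
    """Validate a sheet (a list of rows, each a list of cells) and return records."""
    if not rows:
        raise SpreadsheetError("The spreadsheet is empty.")

    header = [str(cell).strip() for cell in rows[0]]
    if header != EXPECTED_HEADER:
        raise SpreadsheetError(
            "Row 1 should contain the headings %s, but found %s."
            % (", ".join(EXPECTED_HEADER), ", ".join(header))
        )

    records = []
    # Spreadsheet rows are numbered from 1, and row 1 is the header.
    for number, row in enumerate(rows[1:], start=2):
        if len(row) != len(EXPECTED_HEADER):
            raise SpreadsheetError(
                "Row %d has %d cells, expected %d."
                % (number, len(row), len(EXPECTED_HEADER))
            )
        date_text, region, calls = row

        try:
            date = datetime.strptime(str(date_text).strip(), "%Y-%m-%d").date()
        except ValueError:
            raise SpreadsheetError(
                "Row %d: '%s' is not a date in the form YYYY-MM-DD."
                % (number, date_text)
            ) from None

        try:
            value = float(calls)
        except (TypeError, ValueError):
            value = None
        if value is None or value < 0 or not value.is_integer():
            raise SpreadsheetError(
                "Row %d: 'Calls handled' must be a whole number of zero or more, not '%s'."
                % (number, calls)
            )

        records.append(
            {"date": date.isoformat(), "region": str(region).strip(), "calls": int(value)}
        )
    return records


def save(records, connection):
    """Store validated records in a SQLite table (a stand-in for the real data store)."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS calls (date TEXT, region TEXT, calls INTEGER)"
    )
    connection.executemany(
        "INSERT INTO calls VALUES (:date, :region, :calls)", records
    )
    connection.commit()
```

Because every check raises an error that names the row and says what was expected, the message can be shown directly to a non-technical uploader, and nothing is saved unless the whole sheet passes.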

Once the data is loaded, it can be used with other tools just like any other data set, for example to visualize it in Tableau or upload it to CKAN.

The tool is available to our corporate customers, so do get in touch.

P.S. Of course, since we’re wicked good at PDFs as well, the tool can also be used to upload reports as PDFs, unchanged.
