NewsReader – one year on

ScraperWiki has been contributing to NewsReader, an EU FP7 project, for over a year now. In that time, we’ve discovered that all the TechCrunch articles would make a pile 4 metres high, and that’s just one relatively small site. The total volume of news published every day is enormous, but the tools we use to process it are still surprisingly crude.

NewsReader aims to develop natural language processing technology to make sense of large streams of news data; to convert a myriad of mentions of events across many news articles into a network of actual events, thus making the news analysable. In NewsReader the underlying events and actors are called “instances”, whilst the mentions of those events in news articles are called… “mentions”. Many mentions can refer to one instance. This is key to the new technology that NewsReader is developing: condensing down millions of news articles into a network representing the original events.
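To make the mention/instance distinction concrete, here is a minimal sketch in Python. It is not the NewsReader implementation: the `Mention` class, the `instance_id` field and the example URLs are all hypothetical, and the hard part (deciding which mentions refer to the same event) is assumed to have been done already by the language processing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    """One reference to an event or actor, found in a single news article."""
    article_url: str
    text: str
    instance_id: str  # hypothetical key, assumed to come from an earlier NLP step


def group_mentions(mentions):
    """Collapse many mentions into one entry per underlying instance."""
    instances = defaultdict(list)
    for mention in mentions:
        instances[mention.instance_id].append(mention)
    return dict(instances)


# Three mentions in three articles, but only two underlying events.
mentions = [
    Mention("http://example.com/a", "Acme buys Widget Co", "event:acquisition-1"),
    Mention("http://example.com/b", "Widget Co acquired by Acme", "event:acquisition-1"),
    Mention("http://example.com/c", "Acme names new CEO", "event:appointment-1"),
]
instances = group_mentions(mentions)
```

Scaled up, the same many-to-one mapping is what turns millions of articles into a much smaller network of events.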

Our partners

The project has six member organisations. VU University Amsterdam, the University of the Basque Country (EHU) and the Fondazione Bruno Kessler (FBK) in Trento are academic groups working in computational linguistics. Lexis Nexis is a major portal for electronically distributed legal and news information, SynerScope is a Dutch startup specialising in visualising large volumes of information, and then there’s ScraperWiki. Our relevance to the project is our background in data journalism and in scraping to obtain news information.

ScraperWiki’s role in the project is to provide a mechanism for users of the system to acquire news to process with the technology, to help with the system architecture and the open sourcing of the software developed, and to foster the exploitation of the work beyond the project. As part of this we will be running a Hack Day in London in the early summer, which will allow participants to access the NewsReader technology.

Extract News

Over the last year we’ve played a full part in the project. We were involved mainly in Work Package 01 – User Requirements, which meant scoping out large news-related datasets on the web, surveying the types of applications that decision makers use in analysing the news, and proposing the end user and developer requirements for the NewsReader system. Alongside this we’ve been participating in meetings across Europe and learning about natural language processing and the semantic web.

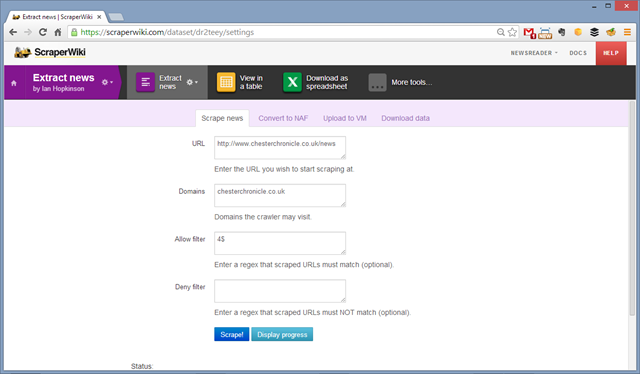

Our practical contribution has been to build an “Extract News” tool. We wanted to provide a means for users to feed articles of interest into the NewsReader system. Extract News is a web crawler which scans a website and converts any news-like content into NLP Annotation Format (NAF) files, which are then processed using the NewsReader natural language processing pipeline. NAF is an XML format which each module of the pipeline reads as input and extends as output. NAF files contain the content and metadata of individual news articles. We are running our own instance of the processing virtual machine on Amazon AWS. Once the files have passed through the natural language processing pipeline they are collectively processed to extract semantic information, which is then fed into a specialised semantic KnowledgeStore, developed at FBK.
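As a rough illustration of the conversion step, the sketch below wraps one scraped article in a small NAF-style XML document using Python’s standard library. The element and attribute names (`nafHeader`, `fileDesc`, `public`, `raw`) are our simplified assumptions about the layout and should be checked against the NAF specification; the function name `article_to_naf` is hypothetical and this is not the code inside our tool.

```python
import xml.etree.ElementTree as ET


def article_to_naf(title, url, published, text, lang="en"):
    """Wrap one article in a minimal NAF-style document (assumed layout)."""
    root = ET.Element("NAF", {"xml:lang": lang, "version": "v3"})

    # Metadata about the article goes in the header.
    header = ET.SubElement(root, "nafHeader")
    ET.SubElement(header, "fileDesc", {"title": title, "creationtime": published})
    ET.SubElement(header, "public", {"uri": url})

    # The untouched article text; later pipeline modules would add
    # their own annotation layers alongside it.
    raw = ET.SubElement(root, "raw")
    raw.text = text

    return ET.tostring(root, encoding="unicode")


naf = article_to_naf(
    title="Council approves new bridge",
    url="http://example.com/news/bridge",
    published="2014-03-03T09:00:00Z",
    text="The council voted on Monday to approve a new bridge & road scheme.",
)
```

Using an XML library rather than string formatting means characters such as `&` in the article text are escaped correctly.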

The more complex parts of our Extract News tool are selecting the “news” part of a potentially messy web page, and providing a user interface suited to monitoring long-running processes. At the moment we need to hand code the extraction of each article’s publication date and author on a “once per website” basis – we hope to automate this process in future.

We’ve been trying out Extract News on our local newspapers – the Chester Chronicle and the Liverpool Echo. It’s perhaps surprising how big these relatively small news sites are – approximately 100,000 pages each – and a site of that size takes several days to scrape. If you wanted to collect news on an ongoing basis then you’d probably consume RSS feeds instead of re-crawling the whole site.
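For the ongoing case, a feed-based collector only needs to pick out items it hasn’t seen before. The sketch below shows the idea with Python’s standard library and a made-up RSS 2.0 feed; the `new_items` function and the sample data are hypothetical, and a real collector would fetch the feed over HTTP and persist the set of seen links.

```python
import xml.etree.ElementTree as ET

# A tiny, invented RSS 2.0 feed standing in for a newspaper's real one.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example local news</title>
    <item>
      <title>Council approves new bridge</title>
      <link>http://example.com/news/bridge</link>
      <pubDate>Mon, 03 Mar 2014 09:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Market reopens after refurbishment</title>
      <link>http://example.com/news/market</link>
      <pubDate>Tue, 04 Mar 2014 09:00:00 GMT</pubDate>
    </item>
  </channel>
</rss>"""


def new_items(feed_xml, seen_links):
    """Return feed items whose link is not already in seen_links."""
    channel = ET.fromstring(feed_xml).find("channel")
    items = []
    for item in channel.findall("item"):
        link = item.findtext("link")
        if link and link not in seen_links:
            items.append({
                "title": item.findtext("title"),
                "link": link,
                "published": item.findtext("pubDate"),
            })
    return items


# Pretend the bridge story was collected on an earlier run.
seen = {"http://example.com/news/bridge"}
fresh = new_items(SAMPLE_FEED, seen)
```

Only the articles behind the fresh links then need to be fetched and converted, rather than the whole site.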

What are other team members doing?

Lexis Nexis have been sourcing licensed news articles for use in our test scenarios and preparing their own systems to make use of the NewsReader data. VU University Amsterdam have been developing the English language processing pipeline and the technology for reducing mentions to instances. EHU are working on scaling the NewsReader system architecture to process millions of documents. FBK have been building the KnowledgeStore – a data store based on Big Data technologies for storing, and providing an interface to, the semantic data that the natural language processing adds to the news. Finally, SynerScope have been using their hierarchical edge-bundling technology and expertise in the semantic web to build high performance visualisations of the data generated by NewsReader.

The team has been busy since the New Year preparing for our first year review by the funding body in Luxembourg: an exercise so serious that we had a full dress rehearsal prior to the actual review!

As our project leader said this morning:

We had the best project review I ever had in my career!!!!!!!

Learn More

We have announced details of our event, which takes place on June 10th.

If you’re interested in learning more about NewsReader, our Extract News tool, or the London Hack Day, then please get in touch!
