Scraped Data: Something to Tweet About

I’m a coding pleb, or The Scraper’s Apprentice as I like to call myself. But I realise that’s no excuse, as many of the ScraperWiki users I talk to have not had formal coding lessons themselves. Indeed, some of our founders aren’t formally trained (we have a doctorate in Chemistry here!).

I’ve been attempting to scrape using the ScraperWiki editor (Lord knows I wouldn’t know where to start otherwise), and the most obvious place to start is the CSV plains of government data. So I parked my ScraperWiki digger outside the Cabinet Office. I began by scraping Special Advisers’ gifts and hospitality, then moved on to Ministers’ meetings, gifts and hospitality, followed by Permanent Secretaries (under the strict guidance of my scraper guru, The Julian). The first thing I noticed was the blatant irregularity of standards and formats. Some fields were left blank on the assumption that the previous entry carried over. And dates! Not even Google Refine could work out what was going on there.
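The "blank means same as above" habit can be undone before the data is saved. Here is a minimal Python sketch of a fill-down pass, assuming each row is a dict mapping column names to strings; `fill_down` and the `Minister` column are hypothetical names for illustration, not part of the Cabinet Office files or any ScraperWiki API.

```python
from typing import Dict, List


def fill_down(rows: List[Dict[str, str]], fields: List[str]) -> List[Dict[str, str]]:
    """Return copies of rows in which a blank value in any of `fields`
    is replaced by the last non-blank value seen above it."""
    last_seen: Dict[str, str] = {}
    filled = []
    for row in rows:
        new_row = dict(row)  # copy so the input rows are left untouched
        for field in fields:
            value = (new_row.get(field) or "").strip()
            if value:
                new_row[field] = value      # keep the whitespace-stripped value
                last_seen[field] = value    # remember it for the rows below
            elif field in last_seen:
                new_row[field] = last_seen[field]  # inherit from the row above
        filled.append(new_row)
    return filled


rows = [
    {"Minister": "A. Minister", "Organisation": "Org One"},
    {"Minister": "", "Organisation": "Org Two"},  # blank: same minister as above
]
print(fill_down(rows, ["Minister"])[1]["Minister"])  # A. Minister
```

Only fill down columns where a blank really does mean "same as above"; elsewhere a blank may just be missing data.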

Although this was an exercise in scraping, I wanted to do something with the results. I wanted to repurpose the data. I wrote some blog posts after looking through some of the datasets, but the whole point of liberating data, especially government data, is to make it democratic. I figured that each line of data had the potential to be a story; I just didn’t know which one. Chris Taggart said that data helps you find the part of the haystack the needle is in, not the needle itself. So if I scatter that part of the haystack, someone else might find the needle.

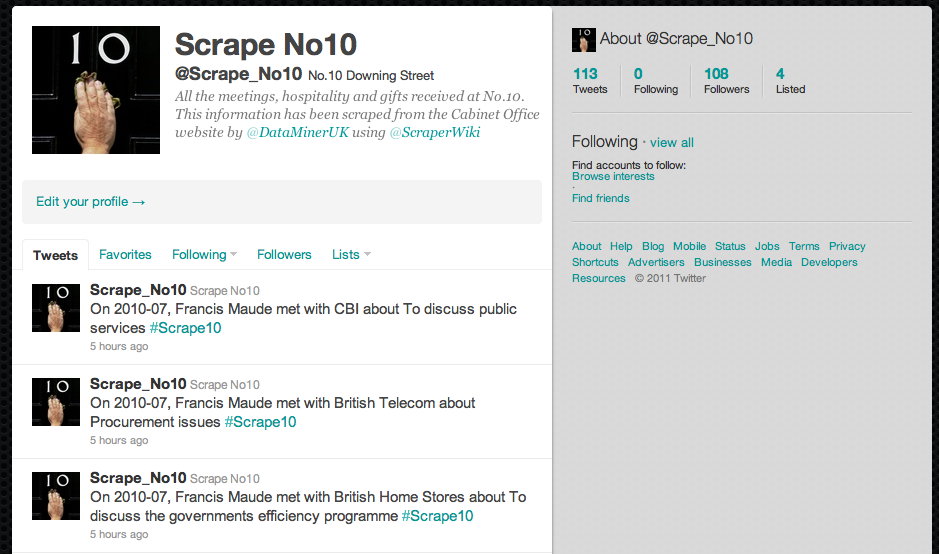

So with the help of Ross and Tom, I set up a Scrape_No10 Twitter account to tweet out the meetings, gifts and hospitality at No.10 in chronological order (hint: for completely erratic datetime formats, convert everything to lexicographically sortable text, e.g. 2010-09-14, and it will order correctly). The idea is that not only is each row of data put into the public domain, but I can also attach a fixed hashtag (#Scrape10) to every tweet. I’ve set the account to tweet out 3 tweets every 3 hours. That way, any single tweet has the potential (depending on how many followers the account attracts) to make the hashtag trend, and that is the sign that someone somewhere has added an interesting piece of information. As Paul Lewis, investigative journalist at the Guardian, noted: “Tweets have an uncanny ability to find their destination”. So rather than filtering social media to find newsworthy information, I’m using social media as a filter for public information and as a crowdsourcing alert system.
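For anyone building something similar, here is a rough Python sketch of the ordering and batching side, assuming each row already has an ISO `Date` string plus hypothetical `Who` and `What` columns; the helper names and the 140-character limit (Twitter's limit at the time) are illustrative assumptions, not the bot's actual code.

```python
from typing import Dict, Iterator, List

HASHTAG = "#Scrape10"  # the bot's fixed hashtag
BATCH_SIZE = 3         # tweets sent per scheduled run ("3 tweets every 3 hours")
MAX_LEN = 140          # assumed tweet length limit (140 when this was written)


def tweet_text(row: Dict[str, str]) -> str:
    """Build one tweet from a row, truncating the body so the hashtag always fits."""
    body = f"{row['Date']} {row['Who']}: {row['What']}"
    room = MAX_LEN - len(HASHTAG) - 1  # leave space for " #Scrape10"
    if len(body) > room:
        body = body[: room - 1] + "…"
    return f"{body} {HASHTAG}"


def batches(rows: List[Dict[str, str]]) -> Iterator[List[str]]:
    """Yield lists of up to BATCH_SIZE tweets, oldest first.

    Sorting zero-padded 'YYYY-MM-DD' strings as plain text is chronological,
    because the most significant part (the year) comes first.
    """
    ordered = sorted(rows, key=lambda r: r["Date"])
    for start in range(0, len(ordered), BATCH_SIZE):
        yield [tweet_text(r) for r in ordered[start:start + BATCH_SIZE]]
```

Each yielded batch would be posted by one scheduled run; the scheduling and the actual posting call are left out because they depend on the scheduler and Twitter library used.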

I ran this as an experiment, explaining it all on my own blog. I was surprised when a certain ScraperWiki star wanted the Twitter bot code, so I’ve copied it and taken out all the authorisation keys. You can view it here and use it as a template. This blog explains very clearly how to set things up at the Twitter end. So let’s get your data out into the open (without spamming). If you make a Twitter bot, please let me know the username. You can email me at nicola(at)scraperwiki.com. I’d like to keep tabs on them all in the blogroll.
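One way to keep a shared template free of authorisation keys is to read them from environment variables at run time. A minimal sketch, assuming the four OAuth values Twitter issues (consumer key and secret, access token and secret); the variable names and `load_credentials` are hypothetical.

```python
import os
from typing import Dict, Mapping

# Hypothetical environment variable names for the four OAuth values
CREDENTIAL_VARS = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_TOKEN_SECRET",
)


def load_credentials(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read the bot's keys from the environment instead of hard-coding them."""
    missing = [name for name in CREDENTIAL_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing Twitter credentials: " + ", ".join(missing))
    return {name: env[name] for name in CREDENTIAL_VARS}
```

The returned values would then be passed to whichever Twitter client library the bot uses, so the code itself can be shared without editing.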

Oh, and here is the script for getting dates into lexicographical format (so that SQL can order them):

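```python
import dateutil.parser  # from the python-dateutil package

def parsemonths(d):
    """Parse a messy date string and return it as ISO 'YYYY-MM-DD' text."""
    d = d.strip()
    print(d)  # show the raw value while debugging
    # dayfirst=True reads UK-style dates such as 14/09/2010 correctly;
    # isoformat() turns the date into text that sorts chronologically.
    return dateutil.parser.parse(d, yearfirst=True, dayfirst=True).date().isoformat()

data = {'Date': ' 14/09/2010 '}  # example row; in the scraper, data is the row being saved
data['Date'] = parsemonths(data['Date'])  # -> '2010-09-14'
```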
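To see why the ISO text matters, here is a small sketch using Python's built-in sqlite3 module as a stand-in for the scraper's datastore; the `meetings` table and the `ordered_dates` helper are hypothetical.

```python
import sqlite3
from typing import List


def ordered_dates(dates: List[str]) -> List[str]:
    """Save date strings to an in-memory SQLite table and read them back with ORDER BY."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE meetings (Date TEXT)")
        conn.executemany("INSERT INTO meetings (Date) VALUES (?)", [(d,) for d in dates])
        return [row[0] for row in conn.execute("SELECT Date FROM meetings ORDER BY Date")]
    finally:
        conn.close()


print(ordered_dates(["14/09/2010", "01/10/2010", "25/06/2010"]))
# ['01/10/2010', '14/09/2010', '25/06/2010']  (text order, not chronological)
print(ordered_dates(["2010-09-14", "2010-10-01", "2010-06-25"]))
# ['2010-06-25', '2010-09-14', '2010-10-01']  (chronological)
```

Text columns are compared character by character, so day-first strings sort by day, while zero-padded year-first strings sort by date.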

For the opposite, I’ve written a scraper in a tweet before:

https://twitter.com/#!/symroe/status/11083089482

🙂