Face ReKognition

I’ve previously written about social media and the popularity of our Twitter Search and Followers tools. But how can we make Twitter data more useful to our customers? Analysing the profile pictures of Twitter accounts seemed like an interesting thing to do, since they often show the face of the account holder, and a face can tell you a number of things about a person, such as their gender, age and race. This type of demographic information is useful for marketing and for understanding who your product appeals to. It could also be a way of tying together public social media accounts, since people like me use the same image across multiple accounts.

Compact digital cameras have offered face recognition for a while, and on my PC, Picasa churns through my photos identifying people in them. I’ve been doing image analysis for a long time, although never before on faces. My first effort at face recognition involved using the OpenCV library. OpenCV provides a whole suite of image analysis functions which do far more than just detect faces. However, getting it installed and working with the Python bindings on a PC was a bit fiddly, and both the documentation and the built-in face analysis capabilities were poor.

Fast forward a few months, and I spotted that someone had cast the ReKognition API over the images that the British Library had recently released, a dataset I’ve been poking around at too. The ReKognition API takes an image URL and a list of the characteristics in which you are interested. These include gender, race, age, emotion, whether or not you are wearing glasses and, oddly, whether you have your mouth open. Besides this summary information, it returns a list of feature locations (i.e. the locations in the image of the eyes, mouth, nose and so forth). It’s straightforward to use.

But who should be the first targets for my image analysis? Obviously, the ScraperWiki team! The pictures are quite small, but ReKognition identified me as a “Happy, white, male, age 46 with no glasses on and my mouth shut”. Age 46 is a bit harsh – I’m actually 39 in my profile picture. A second target came out as “Happy, Indian, male, age 24.7, with glasses on and mouth shut”. This was fairly accurate: Zarino was 25 when the photo was taken, he is male and has his glasses on, but he is not Indian. Two (male) members of the team have still not forgiven ReKognition for describing them as female, particularly the one described as a 14 year old.
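One-line descriptions like those quoted above can be assembled from the per-face attributes with a few lines of Python. This is only a sketch: the dictionary keys (`emotion`, `race`, `gender`, `age`, `glasses`, `mouth_open`) are hypothetical names chosen for illustration and are not the actual field names of the ReKognition response.

```python
def describe(face):
    """Build a one-line description from a dict of face attributes.

    The keys used here are hypothetical, not the real API field names.
    """
    glasses = "with glasses on" if face["glasses"] else "with no glasses on"
    mouth = "mouth open" if face["mouth_open"] else "mouth shut"
    return (
        f"{face['emotion'].capitalize()}, {face['race']}, {face['gender']}, "
        f"age {face['age']:g}, {glasses} and {mouth}"
    )


# Attribute values taken from the first result quoted in the text.
example = {
    "emotion": "happy",
    "race": "white",
    "gender": "male",
    "age": 46,
    "glasses": False,
    "mouth_open": False,
}

print(describe(example))
```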

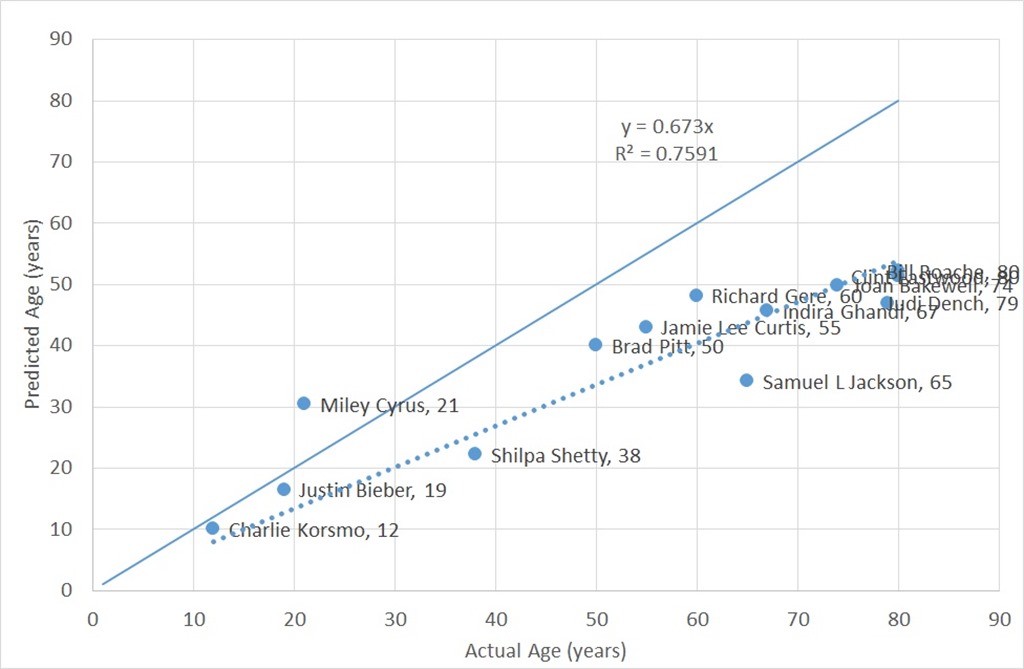

Fun as it was, this doesn’t really count as an evaluation of the technology. I investigated further by feeding in the photos of a whole load of famous people. The results of this are shown in the chart below. The horizontal axis is someone’s actual age, and the vertical axis shows their age as predicted by ReKognition. If the predictions were correct, the points representing the celebrities would fall on the solid line. The dotted line shows a linear regression fit to the data. The equation of the line, y = 0.673x (I constrained it to pass through zero), tells us that age is consistently under-predicted by about a third, or perhaps celebrities look younger than they really are! The R^2 parameter tells us how good the fit is: a value of 0.7591 is not too bad.
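For readers who want to try a fit of this kind themselves, here is a minimal sketch of a least-squares line constrained to pass through zero. The age pairs are made up for illustration and are not the celebrity data. R^2 is computed here as 1 - SS_res/SS_tot with SS_tot taken about the mean; other tools may use a different definition for zero-intercept fits, so the numbers need not match theirs.

```python
def fit_through_origin(xs, ys):
    """Least-squares slope m for the model y = m * x (no intercept)."""
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    return sxy / sxx


def r_squared(xs, ys, slope):
    """1 - SS_res / SS_tot, with SS_tot measured about the mean of ys."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - slope * x) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Made-up (actual age, predicted age) pairs, for illustration only.
actual = [20, 30, 40, 50, 60]
predicted = [14, 21, 26, 35, 39]

slope = fit_through_origin(actual, predicted)
r2 = r_squared(actual, predicted, slope)
print(f"y = {slope:.3f}x, R^2 = {r2:.3f}")
```

A slope below 1 means the predicted ages sit below the actual ages on average, which is how the 0.673 in the text should be read.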

I also tried out ReKognition on a couple of class photos – taken at reunions, graduations and so forth. My thinking was that I would get a cohort of people aged within a year of each other. These actually worked quite well; for older groups of people I got a standard deviation of only 5 years across a group of, typically, 10 people. A primary school class came out at 16 ± 9 years, which wasn’t quite so good. I suspect the performance here is related to the fact that such group photos are taken relatively carefully, and the lighting and setup for each face in the photo are, by the nature of a group photo, the same.
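The "mean plus or minus standard deviation" figures for a group photo can be computed as in the sketch below. The ages are hypothetical values standing in for one reunion photo, and the sample standard deviation (`statistics.stdev`) is an assumption, since the text does not say whether the sample or population form was used.

```python
from statistics import mean, stdev


def summarise_ages(ages):
    """Return (mean, sample standard deviation) of the predicted ages."""
    return mean(ages), stdev(ages)


# Hypothetical predicted ages for the ten faces in one reunion photo.
reunion = [38, 45, 41, 36, 49, 40, 44, 35, 47, 42]

m, s = summarise_ages(reunion)
print(f"{m:.0f} +/- {s:.0f} years")
```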

Looking across these experiments: ReKognition is pretty good at finding faces in photos, and at not finding faces where there are none (about 90% accurate). It’s fairly good with gender (getting it right about 80% of the time, typically struggling a bit with younger children), and it detects glasses pretty well. I don’t feel I tested it well on race. On age, results are variable: for the ScraperWiki set the R^2 value for linear regression between actual and detected ages is about 0.5, whilst for famous people it is about 0.75. In both cases it tends to under-estimate age, and it has never given an age above 55 despite being fed several more mature celebrities and grandparents. So on age, it definitely tells you something, and under certain circumstances it can be quite accurate. Don’t forget that the images we’re looking at are completely unconstrained; they’re not passport photos.

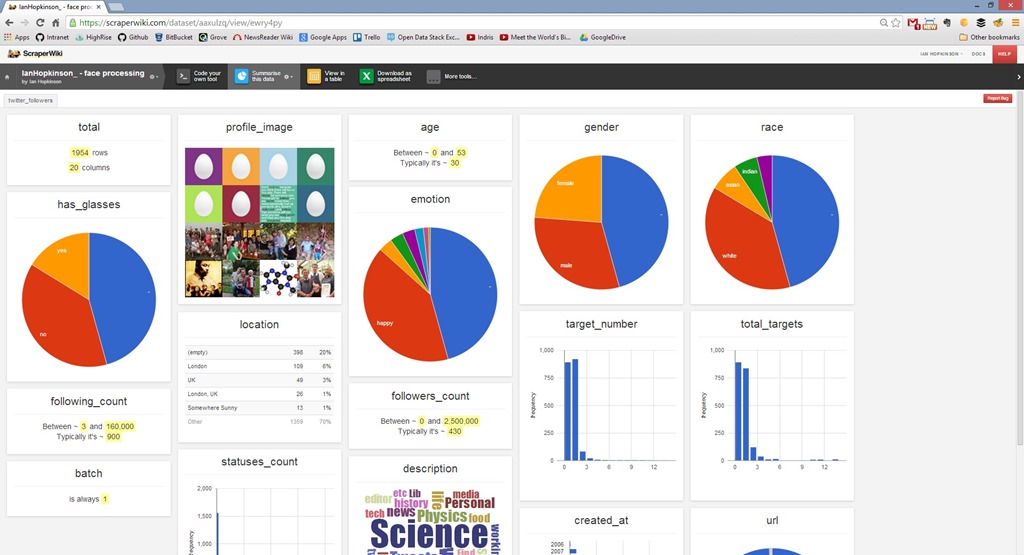

Finally, I applied face recognition to Twitter followers for the ScraperWiki account, and my personal account. The Summarise This Data tool on the ScraperWiki Platform provides a quick overview of the data added by face recognition.

It turns out that a little over 50% of the followers of both accounts have a picture of a human face as their profile picture. It’s clear the algorithm makes the odd error, mis-identifying things that are not human faces as faces (including the back of a London taxi cab). There’s also the odd sketch or cartoon of a face rather than a photo, and some accounts have pictures of famous people who are obviously not the account holder. Roughly a third of the followers of either account are identified as wearing glasses, and three quarters of them look happy. Average ages in both cases were 30. The breakdown in terms of race is 70:13:11:7 White:Asian:Indian:Black. Finally, my followers are approximately 45% female, and those of ScraperWiki are about 30% female.
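Summary figures of this kind are simple proportions over the per-follower results. The sketch below shows one way to compute them; the record layout (`has_face`, `gender`, `glasses`, `race`) is hypothetical and the sample records are invented, so this is not the Summarise This Data tool or the real follower data.

```python
from collections import Counter


def summarise(records):
    """Compute simple proportions over per-follower face results.

    Each record is a dict with hypothetical keys; followers whose
    picture contains no detected face only need "has_face": False.
    """
    faces = [r for r in records if r.get("has_face")]
    n = len(faces)
    if n == 0:
        return {"face_fraction": 0.0}
    return {
        "face_fraction": n / len(records),
        "female_fraction": sum(r["gender"] == "female" for r in faces) / n,
        "glasses_fraction": sum(bool(r["glasses"]) for r in faces) / n,
        "race_counts": Counter(r["race"] for r in faces),
    }


# Invented records, for illustration only.
followers = [
    {"has_face": True, "gender": "female", "glasses": True, "race": "White"},
    {"has_face": True, "gender": "male", "glasses": False, "race": "Asian"},
    {"has_face": False},
    {"has_face": True, "gender": "male", "glasses": False, "race": "White"},
    {"has_face": True, "gender": "female", "glasses": False, "race": "Indian"},
]

summary = summarise(followers)
print(summary)
```

Note that the gender, glasses and race fractions are taken over followers with a detected face, not over all followers.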

We’re now geared up to apply this to lists of Twitter followers – are you interested in learning more about your followers? Then send us an email and we’ll be in touch.

Interesting, I’d also tried OpenCV for face recognition; I’d been running it over my Firefox cache, without that much success. I found it fairly easy to get running and fairly good at finding faces in images, but I never found a good way to train it to recognise them.

Ian – Great stuff (as always!)

I just referenced this post in a rather interesting Quora answer that you might enjoy reading. In retrospect, I probably could have just referenced this post and saved myself a lot of typing 🙂

Here’s the Quora question/answer: How can I find Twitter accounts with a lot of female followers aged 18-35.

http://www.quora.com/How-can-I-find-Twitter-Accounts-with-a-lot-of-female-followers-aged-18-35/answer/Matthew-Russell-1

Cool!

I’m thinking there is also analysis you could do on Twitter users’ first names – the US Census Bureau publishes baby first names going back ages, and you can use this to determine gender, and I think in some cases you can get some hints on age, since the popularity of different first names changes over time. Wes McKinney discusses the dataset in his book “Python for Data Analysis”.